
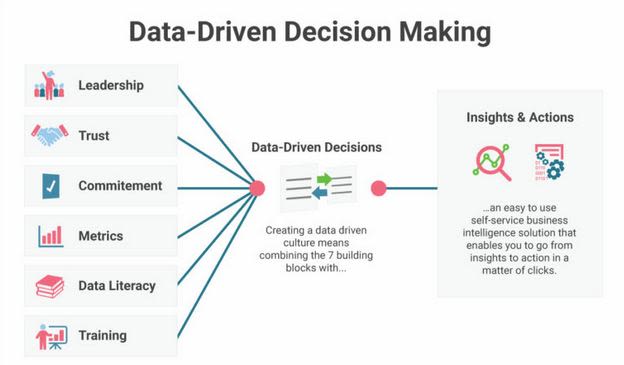

"Intuition" is an over-romanticized term in the current generation. While there’s nothing wrong with going with your gut, doing so may be detrimental for your business. After all, no one wants to create a Highest Paid Person's Opinion (or "HiPPO") culture in their company.

To avoid a HiPPO culture in your office, it’s crucial that, once you’ve analyzed your data, you and your team are empowered to make decisions based on your results rather than on one person's opinion.

In this article, I'll discuss when and how to make data-driven decisions to fuel your business's growth. In the other articles in Edlitera's series, you can learn more about how to design a data science project as a leader.

But first, let’s look at the outcomes that can tell you your data science project is successful:

- You learn something new.

- You use your results to create an impactful final communication product (for example, a report, presentation, or app).

- You learn that your data can’t answer your question.

- You make decisions and new policies based on your results.


Let’s think more on that last point. If taking action based on the results of your data analysis is your goal, you need to design your project for success from the beginning.

For instance, model choice is an important decision at the start of a project. For many projects, active models are better than passive models because they help avoid human decision override. When choosing your model, look for one that is as simple as possible, explainable, and properly limited. When designing your project, don’t consider only the benefits of your model. Take a moment (and tap into your paranoid side) to consider how your model could also cause harm if used incorrectly.


Why Is an Explainable Algorithm So Important?

Artificial intelligence (AI) tools, like neural networks and deep learning, have working mechanisms that humans may not fully understand. Even so, you must understand what an algorithm is being optimized for in order to successfully use it.

It’s also essential to know why an algorithm made a decision. This is especially important in fields such as consumer finance, health care, education, the military, and government.

For leaders, the “what” question is fundamental: you need to ask it of the teams that design and build your automated solutions. To better understand the question, let’s consider the Paperclip Maximizer thought experiment proposed by Nick Bostrom in 2003.

The story goes that an AI system is given the goal of manufacturing paperclips as efficiently as possible. In pursuing that goal, it transforms the whole Earth, and then space, into paperclip manufacturing facilities. In this example, the outlandish results are not technically the AI’s fault, but the fault of the humans who didn’t give the system the right goals and constraints.

A similar, but real-life, example is the AI system set to create school and bus schedules in Boston. While the idea seemed like a great way to improve efficiency, after implementation the designers received plenty of complaints from parents whose schedules had not been considered, because the AI system was too focused on saving money.
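To make the idea of goals and constraints concrete, here is a minimal Python sketch. It is not how the Boston system worked: the candidate schedules, their costs, the disruption counts, and the function names are all invented for illustration. It only shows that the same optimizer picks a different answer once a constraint is added to what it is optimizing for.

```python
# Hypothetical candidate schedules: (name, annual cost in dollars, families disrupted).
# Every figure here is invented for illustration only.
candidates = [
    ("A", 9_000_000, 4_000),
    ("B", 10_500_000, 900),
    ("C", 12_000_000, 100),
]


def cheapest(options):
    """Optimize for cost alone: money is the only thing this objective sees."""
    return min(options, key=lambda option: option[1])


def cheapest_within_limit(options, max_disrupted):
    """Optimize for cost, but only among options that respect a disruption limit."""
    allowed = [option for option in options if option[2] <= max_disrupted]
    if not allowed:
        raise ValueError("No option satisfies the constraint")
    return min(allowed, key=lambda option: option[1])


unconstrained = cheapest(candidates)
constrained = cheapest_within_limit(candidates, max_disrupted=1_000)

print(unconstrained[0])  # A
print(constrained[0])    # B
```

With cost as the only goal, schedule A wins even though it disrupts the most families. Once the limit is part of the problem, the cheapest acceptable option is B. Asking your team "what is this optimized for, and what is it not allowed to do?" is the leadership version of this code.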

Let’s also discuss why it’s important to know why an algorithm makes a decision.

While an AI scientist may understand the technicalities of a model, those technicalities are still hard to explain to non-experts, because machine learning makes decisions based on patterns that don't map neatly onto human logic and intuition. Because AI’s sophistication can make it difficult to understand, the public has raised plenty of concerns about its potential negative impact on lives and jobs.

While I don’t think AI will take your job, I do believe it will allow you to take on higher-value tasks at the job you have. For further assurance that your work is safe, future regulation of data and algorithms will very likely increase AI accountability and, at the same time, create incentives to make AI more explainable. Explainable AI, in turn, enables us to challenge AI-based decisions.
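As a rough illustration of what "explainable" can mean in practice, the Python sketch below uses a deliberately simple linear scoring model. The feature names, weights, and approval threshold are hypothetical and do not come from any real lender or library. The point is only that each input's contribution to the decision can be read off directly, which is what makes the decision possible to challenge.

```python
# Hypothetical weights for a simple linear scoring model (assumed, not real).
WEIGHTS = {"income_thousands": 0.5, "years_at_job": 2.0, "missed_payments": -15.0}
THRESHOLD = 40.0  # assumed approval cutoff


def explain(applicant):
    """Return the decision together with each feature's contribution to the score."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = sum(contributions.values())
    return {
        "approved": score >= THRESHOLD,
        "score": score,
        "contributions": contributions,
    }


applicant = {"income_thousands": 60, "years_at_job": 5, "missed_payments": 1}
result = explain(applicant)

print(result["approved"])  # False
# List the contributions from most harmful to most helpful.
for name, value in sorted(result["contributions"].items(), key=lambda item: item[1]):
    print(name, value)
```

Here the applicant is declined, and the breakdown shows that the single missed payment is what pulled the score below the cutoff. A more complex model may be more accurate, but it rarely offers an explanation this direct, which is the trade-off to weigh when you choose a model.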


There is no doubt that data is a precious tool for businesses. This article has hopefully shown how understanding the “what” and “why” of your algorithm can enable you to make data-driven decisions once you have your results. So the next time you’re faced with a decision, you can make it the better way: based on data, not intuition.