In the previous article, we established the foundation of our video editing application. We explained how the preprocessing pipeline extracts audio from videos and how Whisper converts that audio into text. Our helper functions ensure that users can only remove words, without adding or rearranging them. We also explored Streamlit’s execution model, where the script reruns from top to bottom with each interaction. To optimize this process, we discussed key techniques such as caching, callbacks, and session state.

We also explored the conceptual design of our application, examining how the home page, video preview, transcript editor, and warning messages work together to create a smooth user experience. Now, it is time to dive into the actual code implementation.

How to Set Up the Helper Functions

Before building the main app, let's examine the helper functions that handle specific tasks within our application. These functions keep our main app code cleaner and more modular. First, let's take a look at the is_port_in_use() and start_flask_server() functions. These are responsible for setting up our Flask server.

```python
import socket
import subprocess
import time

import streamlit as st


def is_port_in_use(port: int) -> bool:
    """
    Check if a given port is in use.

    Args:
        port (int): Port number to check.

    Returns:
        bool: True if the port is in use, otherwise False.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        return s.connect_ex(("localhost", port)) == 0


def start_flask_server():
    """
    Start the Flask server (flask_video_server.py) on port 8000 if it's not already running.
    """
    if not is_port_in_use(8000):
        # Start the Flask server in the background
        server_process = subprocess.Popen(["python", "flask_video_server.py"])
        st.session_state["server_process"] = server_process
        # Give the server a moment to start
        time.sleep(2)
```

The is_port_in_use() function is quite straightforward. It attempts to connect to the given port on localhost using connect_ex(), which returns 0 instead of raising an exception when the connection succeeds. A successful connection means something is already listening on that port, so the function returns True. Otherwise, it returns False. This allows us to determine whether a particular port is available.

The start_flask_server() function relies on is_port_in_use() to check whether port 8000, where we want to run our Flask server, is available. If the port is free, it starts the server using Python's subprocess module and stores the process in Streamlit's session state as "server_process" for later reference. It then waits two seconds to give the server time to initialize. Essentially, this function prevents multiple instances of the Flask server from running simultaneously.
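The fixed two-second pause in start_flask_server() is a guess. On a slow machine the server may need longer, and on a fast one the app waits for no reason. One alternative, assuming polling is acceptable, is to reuse the same connect_ex() check in a loop with a timeout. The wait_for_port() helper and its parameters below are hypothetical and are not part of the original app.

```python
import socket
import time


def is_port_in_use(port: int, host: str = "localhost") -> bool:
    """Return True if something is accepting TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        # connect_ex() returns 0 on success instead of raising an exception
        return s.connect_ex((host, port)) == 0


def wait_for_port(port: int, timeout: float = 10.0, interval: float = 0.1) -> bool:
    """Poll until the port accepts connections or the timeout expires.

    Hypothetical helper: a possible replacement for a fixed time.sleep(2).
    Returns True as soon as the port is reachable, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if is_port_in_use(port):
            return True
        time.sleep(interval)
    return False
```

Inside start_flask_server(), you could call wait_for_port(8000) in place of time.sleep(2) and show an error if it returns False. That way the app waits only as long as the server actually needs.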

The next function to examine is validate_and_process_edit():

```python
def validate_and_process_edit():
    """
    Validate the edited transcription, update session states, and refresh
    the video player by removing specified time ranges.
    """
    original_transcription = st.session_state["original_transcription"]
    edited_transcription = st.session_state["transcription"]
    transcription_data = st.session_state["transcription_data"]

    if is_valid_edit(original_transcription, edited_transcription):
        st.session_state["undo_error"] = False

        # Update valid transcription and history
        st.session_state["latest_valid_transcription"] = edited_transcription
        if edited_transcription not in st.session_state["transcription_history"]:
            st.session_state["transcription_history"].append(edited_transcription)

        # Update the chunks to reflect the removed words
        converter = TextToTimestampConverter(transcription_data)
        updated_chunks = converter.update_chunks(edited_transcription)

        # Gather timestamps that will be removed
        timestamps_to_remove = [
            chunk["timestamp"]
            for chunk in transcription_data["chunks"]
            if chunk not in updated_chunks
        ]
        st.session_state["ranges_to_remove"] = timestamps_to_remove

        # Prepare playback ranges to highlight removed parts
        playback_ranges = prepare_playback_ranges(
            transcription_data["chunks"],
            timestamps_to_remove
        )

        # Generate an updated video player
        file_name = os.path.basename(st.session_state["video_path"])
        base_file_name = os.path.splitext(file_name)[0]
        directory_name = f"{base_file_name}_for_editing"
        video_url = f"http://localhost:8000/videos/{directory_name}"
        videojs_html = generate_videojs_player(video_url, file_name, playback_ranges)

        # Center the video in the layout
        centered_videojs_html = f"""
        <div style="display: flex; justify-content: center; align-items: center; margin-top: 20px;">
            {videojs_html}
        </div>
        """
        v1.html(centered_videojs_html, height=400)

        # Hide original player once edited version is displayed
        st.session_state["hide_original_player"] = True
    else:
        # Invalid edit -> revert transcription
        if "latest_valid_transcription" in st.session_state:
            st.session_state["transcription"] = st.session_state["latest_valid_transcription"]
        else:
            st.session_state["transcription"] = original_transcription
        st.sidebar.error("Invalid edit: Only deletions are allowed.")
```

This function is triggered whenever the user edits the transcript in the text area (we will discuss setting up the text area later in the article). It first retrieves the original and edited transcriptions from the session state, along with the full transcription data, which includes timestamps.

Next, it checks whether the edit is valid using the is_valid_edit() helper function. We introduced this function in the third article of this series, the one focused on validating text edits and synchronizing them with timestamps. It ensures that users can only remove words, not add or rearrange them.
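The series' is_valid_edit() implementation lives in the third article and is not reproduced here. To see what a deletions-only check involves, here is one possible sketch that compares edits word by word. Under that approach, an edit is valid exactly when the edited words form a subsequence of the original words. The name is_deletion_only() is hypothetical, and the real helper may treat punctuation or whitespace differently.

```python
def is_deletion_only(original: str, edited: str) -> bool:
    """Return True if `edited` can be produced from `original` purely by
    deleting whole words (no additions, no reordering).

    Hypothetical sketch: the edited words must form a subsequence of the
    original words.
    """
    original_words = iter(original.split())
    # `word in iterator` consumes the iterator up to and including the match,
    # so each edited word must appear after the previously matched word.
    return all(word in original_words for word in edited.split())


print(is_deletion_only("the quick brown fox", "the brown fox"))  # True
print(is_deletion_only("the quick brown fox", "the fox brown"))  # False
```

Partially deleted words (for example "quck") fail this check too, because they no longer match any original word.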

If the edit is valid, the function:

- Updates the session state to store the latest valid transcription.

- Adds the transcription to the history for undo functionality.

- Creates a converter as an instance of the TextToTimestampConverter class (introduced in the third article of this series) to synchronize text edits with timestamps.

- Calls the converter's update_chunks() method to get the timestamped chunks that remain after the edit.

- Collects the timestamps of every chunk that no longer appears, stores them as the ranges to remove, and prepares the playback ranges.

- Generates an updated Video.js player with the new playback ranges.

- Centers the player in the layout using HTML and displays it.

- Hides the original player.

If the edit is invalid, the function reverts the transcription to the last valid version and displays an error message to the user.
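To make the timestamp bookkeeping concrete, the sketch below runs the same list comprehension on toy chunks shaped like the ones the app reads, with one "timestamp" tuple per chunk. The helper names gather_timestamps_to_remove() and merge_ranges() are hypothetical. The merge step is an optional extra that the app does not perform: deleting adjacent words produces touching ranges, and merging them reduces the number of cuts.

```python
from typing import Any, Dict, List, Tuple

Chunk = Dict[str, Any]
Range = Tuple[float, float]


def gather_timestamps_to_remove(chunks: List[Chunk], kept_chunks: List[Chunk]) -> List[Range]:
    """Same logic as the list comprehension in validate_and_process_edit()."""
    return [chunk["timestamp"] for chunk in chunks if chunk not in kept_chunks]


def merge_ranges(ranges: List[Range], gap: float = 0.0) -> List[Range]:
    """Merge (start, end) ranges that overlap or are at most `gap` seconds apart.

    Optional extra step; the app as written does not merge ranges.
    """
    merged: List[Range] = []
    for start, end in sorted(ranges):
        if merged and start - merged[-1][1] <= gap:
            # Extend the previous range instead of starting a new one
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


# Toy chunks: "quick" and "brown" were deleted from the transcript
chunks = [
    {"text": " The", "timestamp": (0.0, 0.4)},
    {"text": " quick", "timestamp": (0.4, 0.8)},
    {"text": " brown", "timestamp": (0.8, 1.2)},
    {"text": " fox", "timestamp": (1.2, 1.6)},
]
kept = [chunks[0], chunks[3]]

removed = gather_timestamps_to_remove(chunks, kept)
print(removed)                # [(0.4, 0.8), (0.8, 1.2)]
print(merge_ranges(removed))  # [(0.4, 1.2)]
```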

Finally, we need to define the undo_last_edit() helper function:

```python
def undo_last_edit():
    """
    Undo the last transcription edit by reverting to the previous transcription in history.
    """
    # If the latest transcription is not the original, pop it from the history
    if st.session_state["transcription_history"][-1] != st.session_state["original_transcription"]:
        st.session_state["transcription_history"].pop()
        st.session_state["transcription"] = st.session_state["transcription_history"][-1]
        st.session_state["undo_error"] = False
        validate_and_process_edit()
    else:
        st.session_state["undo_error"] = True
        st.session_state["hide_original_player"] = False
```

The undo_last_edit() function is a crucial part of our application, as it leverages Streamlit's session state to manage user interactions across reruns. Simply put, it enables users to undo changes made to the transcription. In our app, the transcription_history key in the session state holds a list of all valid transcription versions, ordered from oldest to newest.

When the user clicks the "Undo Last Edit" button (explained later in the article), the function first checks whether the most recent transcription in the history (accessed via transcription_history[-1]) differs from the original one. This comparison determines whether there are any edits to undo.

If there are edits to undo, the function:

- Removes the most recent transcription from the history using pop().

- Sets the current transcription to the previous version, which is now the last item in the history.

- Clears any previous undo error flags (we’ll explain this process later in the article).

- Calls validate_and_process_edit() to update the video player based on the reverted transcription.

This last step is particularly important because it ensures that both the text and the video preview remain synchronized after the undo operation. The validate_and_process_edit() function will recalculate which parts of the video should be removed based on the updated transcription. It will also refresh the Video.js player accordingly.

If there are no more edits to undo (i.e., we've already reached the original transcription), the function:

- Sets the undo_error flag to True, which triggers an error message in the UI.

- Sets hide_original_player to False, which ensures that the original, unedited video is displayed.

This approach allows users to undo edits one by one, in the reverse order they were made.
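Because session state behaves like a dictionary, the history logic can be exercised without Streamlit. The sketch below replaces st.session_state with a plain dict and leaves out the video refresh. The functions record_edit() and undo() are hypothetical names that mirror the history handling in validate_and_process_edit() and undo_last_edit().

```python
def record_edit(state: dict, edited: str) -> None:
    """Store a valid edit and append it to the history unless it is already
    there (mirrors the check in validate_and_process_edit())."""
    state["transcription"] = edited
    if edited not in state["transcription_history"]:
        state["transcription_history"].append(edited)


def undo(state: dict) -> None:
    """Plain-dict version of undo_last_edit(), without the video refresh."""
    history = state["transcription_history"]
    if history[-1] != state["original_transcription"]:
        history.pop()                        # drop the most recent edit
        state["transcription"] = history[-1]  # previous version is now last
        state["undo_error"] = False
    else:
        state["undo_error"] = True            # already at the original


state = {
    "original_transcription": "the quick brown fox",
    "transcription": "the quick brown fox",
    "transcription_history": ["the quick brown fox"],
    "undo_error": False,
}
record_edit(state, "the brown fox")
record_edit(state, "the fox")
undo(state)
print(state["transcription"])  # the brown fox
undo(state)
undo(state)
print(state["undo_error"])  # True
```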

What Is the Main App Function

Now that all the necessary helper functions have been defined, let's examine the main app function, which orchestrates the entire Streamlit application. Since the function is quite large, we'll break its analysis into smaller segments.

How to Configure the Page

```python
def video_editing_app():
    """
    Main Streamlit app for editing videos by removing words from the transcribed text.
    """
    st.set_page_config(
        page_title="Video Editing App",
        layout="wide",
        initial_sidebar_state="expanded"
    )

    # Hide Streamlit's default UI elements (menu, footer, etc.)
    hide_streamlit_style = """
    <style>
    #MainMenu {visibility: hidden;}
    footer {visibility: hidden;}
    .stStatusWidget {display: none;}
    header {visibility: hidden;}
    </style>
    """
    st.markdown(hide_streamlit_style, unsafe_allow_html=True)
```

The function begins by configuring the page using the st.set_page_config() function. With this function, we define the title of our page, choose the layout type, and make the sidebar expanded by default. This is crucial, since much of the app's functionality lives in the sidebar.

Next, we add custom CSS to hide specific default UI elements in Streamlit, creating a cleaner and more focused interface. These default UI elements can interfere with the custom widgets we will create for our app. Therefore, removing them improves the overall user experience.

Next, we set up the sidebar.

```python
# Sidebar layout
st.sidebar.markdown(
    """
    <div style="text-align: center; margin-bottom: 20px;">
    <img src="https://edlitera-images.s3.amazonaws.com/new_edlitera_logo.png" width="150"/>
    </div>
    <div style="text-align: center;">Edit your video by removing words from it!</div>
    """,
    unsafe_allow_html=True
)
```

This code creates a centered logo and a tagline in the sidebar. We use st.sidebar.markdown() with the unsafe_allow_html=True parameter to enable HTML formatting. This allows us to have more control over the appearance and layout.

Before proceeding to create the widget for uploading a video, we first check whether the Flask server is running. This is done because the app depends on the server to display videos.

```python
# Ensure Flask server is running
if "server_process" not in st.session_state:
    start_flask_server()
```

Finally, after confirming that the Flask server is running, we can proceed and create the file uploader widget.

```python
# File uploader
uploaded_file = st.sidebar.file_uploader(
    "Upload your file here",
    type=["mp4", "mov", "avi"],
    label_visibility="hidden"
)
```

This widget appears in the sidebar, allowing users to upload video files in MP4, MOV, or AVI format. Since we already have a descriptive tagline above, the label for the uploader is hidden to avoid redundancy. After creating the widget, we need to define the behaviour for when a file is uploaded.

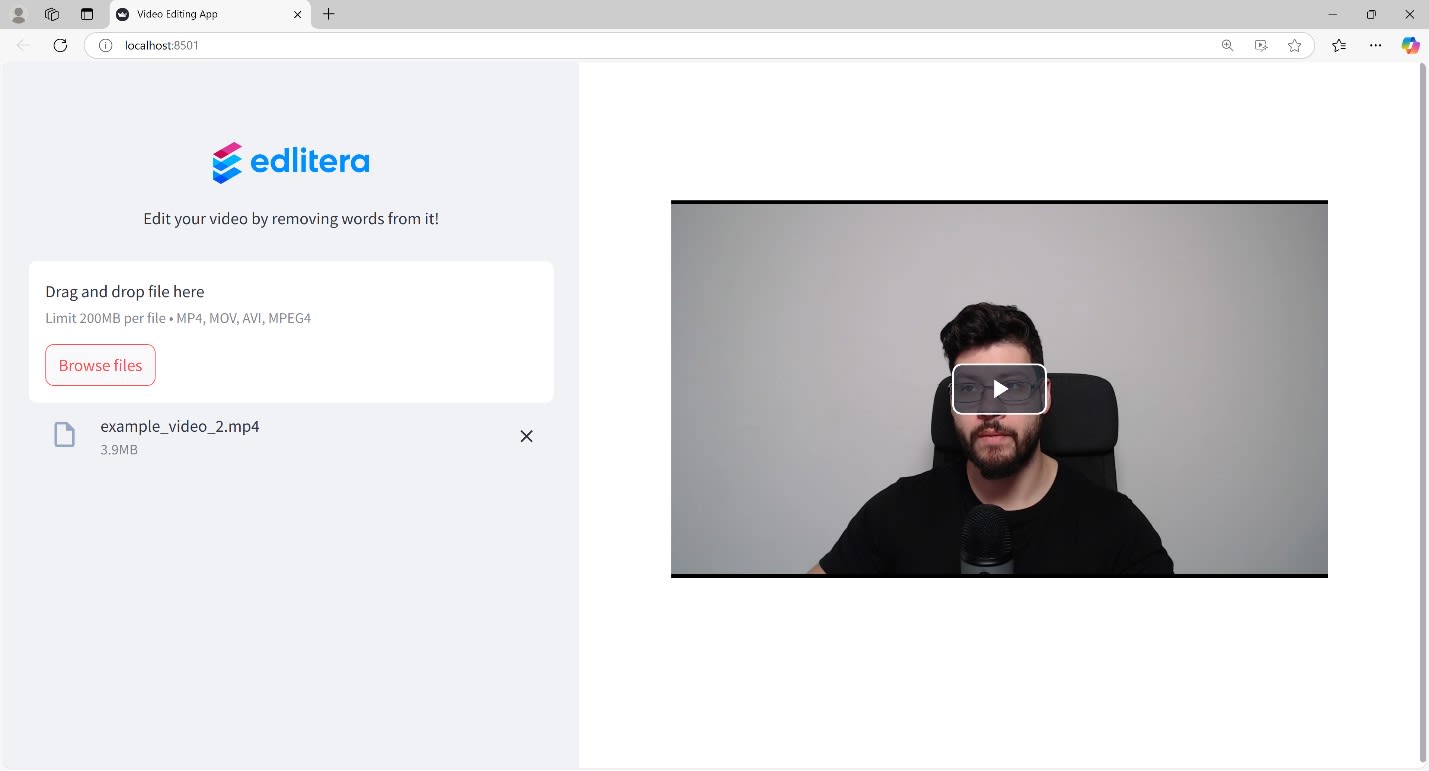

Based on the code above, the following page will be generated in Streamlit:


How to Handle File Uploads

The rest of the main function handles what happens after a file is uploaded. First, the code creates a directory structure to store the video and related files. Next, it checks if this is a new upload by comparing the file path to what is currently stored in the session state. If it is a new upload, the code writes the file to disk and resets all relevant session state variables.

```python
if uploaded_file:
    file_name = uploaded_file.name
    base_file_name = os.path.splitext(file_name)[0]
    directory_name = f"{base_file_name}_for_editing"
    directory_path = os.path.join(os.getcwd(), "videos", directory_name)
    os.makedirs(directory_path, exist_ok=True)
    video_path = os.path.join(directory_path, file_name)

    # If a new or different file is uploaded, reset state
    if "video_path" not in st.session_state or st.session_state["video_path"] != video_path:
        with open(video_path, "wb") as f:
            f.write(uploaded_file.getbuffer())
        st.session_state["video_path"] = video_path
        st.session_state["original_transcription"] = None
        st.session_state["transcription"] = None
        st.session_state["transcription_data"] = None
        st.session_state["latest_valid_transcription"] = None
        st.session_state["hide_original_player"] = False
        st.session_state["transcription_history"] = []
        st.session_state["undo_error"] = False
        # Clear cut ranges left over from a previously edited video
        st.session_state["ranges_to_remove"] = []
```

The most critical aspect of this code section, however, is managing Streamlit's session state. When a new file is uploaded, the app's state resets, and we initialize several session state variables that we use throughout the app:

- st.session_state["video_path"]: Stores the path to the current video. This is used for file operations and to check if a new video has been uploaded.

- st.session_state["original_transcription"]: Stores the original text transcription generated by Whisper, serving as the immutable baseline for the video.

- st.session_state["transcription"]: Maintains the current working version of the transcription, which users can edit. This is directly linked to the text area widget in the UI.

- st.session_state["transcription_data"]: Contains the complete transcription data from Whisper, including text and timestamp details for each word or segment.

- st.session_state["latest_valid_transcription"]: Tracks the most recent valid edit.

- st.session_state["hide_original_player"]: Controls whether the original video player is displayed or replaced with the edited preview.

- st.session_state["transcription_history"]: Stores a history of all valid edits, enabling the undo functionality.

- st.session_state["undo_error"]: A flag that triggers an error message when users attempt to undo changes but have already reverted to the original transcription.

This complete reset of session state variables guarantees that our app starts with a clean slate whenever a new video is uploaded. Each variable serves a distinct purpose, from tracking edits to managing UI elements.
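Listing every key by hand works, but it is easy to forget one. An alternative is to keep the per-video defaults in a single dictionary and write them all in one loop, as the hypothetical reset_video_state() helper below does. The table also includes ranges_to_remove, which the "Generate Edited Video" button reads, so cut ranges from a previous video cannot leak into the next one. The helper works with a plain dict or with st.session_state, since both support item assignment.

```python
from copy import deepcopy
from typing import Any, MutableMapping

# Hypothetical table of per-video defaults; keys mirror the ones used above.
SESSION_DEFAULTS = {
    "original_transcription": None,
    "transcription": None,
    "transcription_data": None,
    "latest_valid_transcription": None,
    "hide_original_player": False,
    "transcription_history": [],
    "undo_error": False,
    "ranges_to_remove": [],
}


def reset_video_state(state: MutableMapping[str, Any], video_path: str) -> None:
    """Reset all per-video keys when a new file is uploaded."""
    state["video_path"] = video_path
    for key, default in SESSION_DEFAULTS.items():
        # deepcopy so mutable defaults (lists) are never shared between uploads
        state[key] = deepcopy(default)


state = {"ranges_to_remove": [(1.0, 2.0)]}
reset_video_state(state, "videos/demo_for_editing/demo.mp4")
print(state["ranges_to_remove"])  # []
```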

How to Validate the Video File

Next, we validate the uploaded video:

```python
# Validate video
try:
    with VideoFileClip(video_path) as clip:
        _ = clip.duration
    valid_video = True
except Exception:
    st.sidebar.error("Invalid video file. Please upload a valid video.")
    valid_video = False

if valid_video:
    video_url = f"http://localhost:8000/videos/{directory_name}"

    # Show original player if not hidden
    if not st.session_state["hide_original_player"]:
        videojs_html = generate_videojs_player(video_url, file_name)
        centered_videojs_html = f"""
        <div style="display: flex; justify-content: center; align-items: center; margin-top: 20px;">
            {videojs_html}
        </div>
        """
        v1.html(centered_videojs_html, height=400)
```

This utilizes MoviePy's VideoFileClip to verify the uploaded file's validity by attempting to retrieve its duration. If the attempt fails, an error message is displayed, and valid_video is set to False. If the video is valid, the process continues with displaying the video and managing the transcription. Specifically, the code generates a URL for the video to be served by the Flask server. In addition, it ensures that the original video player is displayed unless it has been replaced by an edited version.

The code above will generate a player that looks like this:

At this point, however, we have yet to cover the part of our app responsible for generating the transcription. This is what we will focus on next.

How to Transcribe the Video and Display the Transcription in a Text Area

Next, we need to transcribe the video and display the transcription in an editable text area. To transcribe the video, we use the following code:

```python
# Transcribe if no transcription is stored
if st.session_state.get("original_transcription") is None:
    preprocessor = Preprocessor(video_path)
    preprocessor.extract_audio()

    # Transcription
    # Optionally use WhisperTimestamped() if needed
    whisper_transcriber = WhisperLargeTurbo(language="en")
    transcriber = Transcriber(whisper_transcriber)
    audio_path = os.path.join(directory_path, f"{base_file_name}_audio.wav")
    transcription = transcriber.transcribe(audio_path)
    transcription_text = transcription["text"]

    st.session_state["original_transcription"] = transcription_text
    st.session_state["transcription"] = transcription_text
    st.session_state["transcription_data"] = transcription
    st.session_state["latest_valid_transcription"] = transcription_text
    st.session_state["transcription_history"].append(transcription_text)
```

If no transcription is stored in the session state, we run our preprocessing pipeline to extract audio from the video. Next, we use our transcription pipeline with the WhisperLargeTurbo model to transcribe it. The resulting transcription and its text are then stored in the session state for use throughout the app. To recap, we defined the preprocessing pipeline and transcription models in the second article of this series.
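The app reads only a few fields from the transcriber's output: transcription["text"], transcription["chunks"], and each chunk's "timestamp". The toy dictionary below assumes a shape inferred from those keys; real output may carry extra fields. It is paired with a hypothetical words_between() helper that shows how word-level timestamps tie the text back to positions in the video.

```python
from typing import Any, Dict, List

# Toy data in the shape the app appears to rely on (an assumption based on
# the keys it reads; real Whisper output may differ in detail).
transcription: Dict[str, Any] = {
    "text": " Welcome to the editing demo",
    "chunks": [
        {"text": " Welcome", "timestamp": (0.0, 0.5)},
        {"text": " to", "timestamp": (0.5, 0.6)},
        {"text": " the", "timestamp": (0.6, 0.75)},
        {"text": " editing", "timestamp": (0.75, 1.2)},
        {"text": " demo", "timestamp": (1.2, 1.6)},
    ],
}


def words_between(data: Dict[str, Any], start: float, end: float) -> List[str]:
    """Return the words whose timestamps fall entirely inside [start, end]."""
    return [
        chunk["text"].strip()
        for chunk in data["chunks"]
        if chunk["timestamp"][0] >= start and chunk["timestamp"][1] <= end
    ]


print(words_between(transcription, 0.5, 1.2))  # ['to', 'the', 'editing']
```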

After creating the transcription, we need to create a widget in Streamlit to store and display it. The ideal widget for this purpose is the text_area widget. It creates a text area where the transcription is displayed, and allows users to modify the text.

Additionally, the widget can run a callback function whenever its text changes. We will use this to display the transcription and update the video player when the user makes changes. Unfortunately, the widget offers no way to restrict users to only removing words. This is why we created the validate_and_process_edit() function earlier.

We can create the text area using the following code:

```python
# Sidebar text area for editing transcription
st.sidebar.text_area(
    "Edit transcription (deletions only):",
    key="transcription",
    height=300,
    on_change=validate_and_process_edit
)
```

This seemingly simple code snippet creates the most crucial interface element in our entire video editing application. By using st.sidebar.text_area(), we position this editing interface in the sidebar rather than the main content area. This layout choice creates a clear visual distinction between viewing content (main area) and editing tools (sidebar).

The label assigned to this text area is "Edit transcription (deletions only)". Even though, as mentioned previously, we can't prevent users from making changes beyond deleting parts of the transcription, this instruction serves as a guideline. At the very least, it informs unfamiliar users about the constraints of our editing system.

The key="transcription" parameter is particularly powerful because it creates a two-way binding between the widget and the session state variable of the same name:

- When the application first loads a video and generates a transcription, st.session_state["transcription"] is set to the transcription text.

- This value automatically populates the text area.

- When users edit the text, those changes automatically update st.session_state["transcription"].

- If the code programmatically updates st.session_state["transcription"], for instance during an undo operation inside a callback, the text area displays the updated content as soon as the app reruns.

This two-way binding creates a seamless user experience by ensuring that the text area always reflects the current transcription state. Whether the user edits the text directly or the application updates it through functions like undo, the changes are instantly displayed.

Finally, we need to define the callback mechanism. The on_change parameter lets us specify which function should run whenever a user changes the text in the text area. We will use the validate_and_process_edit() function created earlier. Because this function runs every time the user commits a change (by clicking outside the text area or pressing Ctrl+Enter), even a single-character edit is automatically checked for validity.

If the edit is valid, we provide immediate visual feedback in the form of a video with the corresponding segments removed. Because of the way we set up the validate_and_process_edit() function earlier, any valid edits are added to the transcription history, enabling the undo functionality. Conversely, if the edit is invalid, the text reverts to the last known valid version.

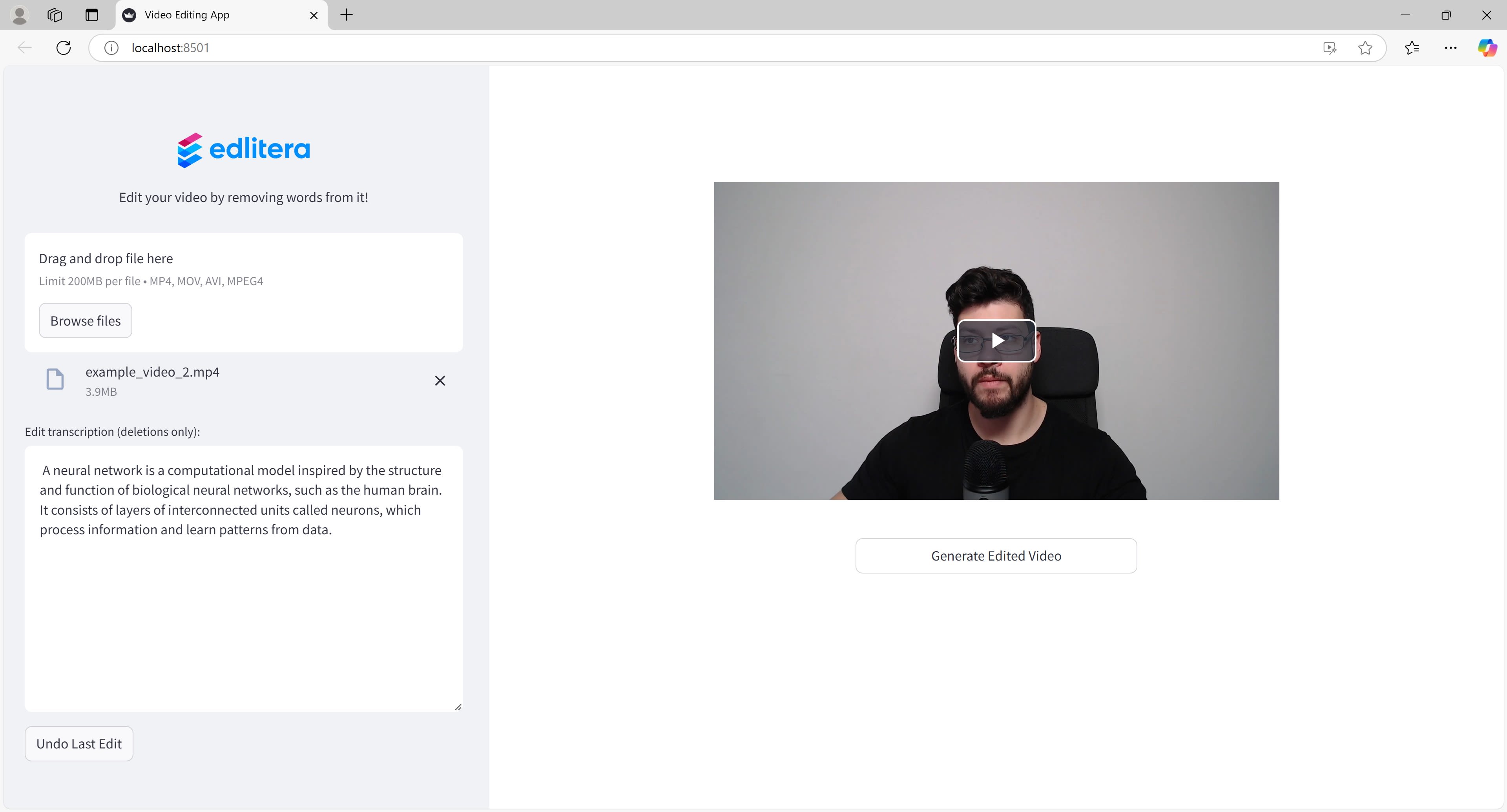

Our screen, displaying a transcription within the text area, will appear as follows:

As can be seen, our code generates two additional buttons. Let's explore their functionality next.

What Is the "Undo Last Edit" Button

```python
st.sidebar.button("Undo Last Edit", on_click=undo_last_edit)

error_placeholder = st.sidebar.empty()
if st.session_state["undo_error"] is True:
    error_placeholder.error("No edits to undo")
```

This block of code generates the undo button below the text area. The st.sidebar.button() function creates a button widget whose label, in our case, is "Undo Last Edit". Moreover, similar to the text area, we can define a callback function that runs when the user clicks the button. Here, the callback is the undo_last_edit() function, which handles the logic of reverting to the previous version of the transcription.

The first line of this block wires up the undo functionality, while the remaining lines handle error management. To be more precise, st.sidebar.empty() reserves a space in the UI where content can be displayed conditionally. Initially, this placeholder stays empty and takes up no visual space, because the session state value for "undo_error" is False by default.

If the user clicks the undo button, and the undo_last_edit() function determines that there are no more edits to undo, it means that we've reached the original transcription. In this case, the session state value changes to True. When that happens, an error message is displayed in the space we reserved earlier using st.sidebar.empty().

What Is the "Generate Edited Video" Button

Finally, we need to create a block of code that generates a button, allowing users to click and produce the final edited video.

```python
# Button to generate edited video
left, middle, right = st.columns(3)
button_placeholder = middle.empty()

if button_placeholder.button("Generate Edited Video", use_container_width=True):
    if st.session_state.get("ranges_to_remove"):
        output_file_name = "edited_video.mp4"
        output_path = os.path.join(directory_path, output_file_name)
        button_placeholder.empty()
        st.session_state["hide_original_player"] = True

        # Spinner during processing
        spinner_html = """
        <div style="display: flex; justify-content: center; align-items: center; height: 200px;">
            <div class="loader"></div>
        </div>
        <style>
        .loader {
            border: 16px solid #f3f3f3;
            border-radius: 50%;
            border-top: 16px solid #3498db;
            width: 120px;
            height: 120px;
            animation: spin 2s linear infinite;
        }
        @keyframes spin {
            0% { transform: rotate(0deg); }
            100% { transform: rotate(360deg); }
        }
        </style>
        """
        spinner_placeholder = st.empty()
        spinner_placeholder.markdown(spinner_html, unsafe_allow_html=True)

        try:
            editor = VideoEditor(st.session_state["video_path"])
            editor.remove_parts(st.session_state["ranges_to_remove"], output_path)
        finally:
            spinner_placeholder.empty()

        st.video(output_path)
    else:
        st.session_state["hide_original_player"] = False
        middle.warning("Video hasn't been modified.")
```

Creating a button here and positioning it exactly where we want it is somewhat more complex. Streamlit's layout system is built around columns, so we first split the main content area beneath the player into sections. By using st.columns(3), we divide the available width into three equal columns and capture the column objects as left, middle, and right. Next, we create an empty placeholder in the middle column, where our button will be placed. This centers the button horizontally in the page's main content area.

The conditional statement that follows creates a button within the placeholder, labeled "Generate Edited Video." It also ensures that the button expands to fill the column width, making it more visually prominent.

The button is placed within a conditional statement, ensuring that the indented code executes only when it is clicked. The button will be displayed as soon as the original video is transcribed. However, its function depends on the current session state, leading to different outcomes upon clicking.

If no cut ranges are stored (ranges_to_remove is missing or empty, for example because the transcription hasn't been edited), clicking the button triggers a warning informing us that the video hasn't been modified.

If the current transcription in the text area is not the original transcription, the session state will track which parts of the original video need to be removed to produce the final edited version. Essentially, when we edit the transcription and click the button, the code will do the following:

- Set up the output path for the edited video.

- Hide the original video player.

- Display a spinner animation to indicate processing.

- Use the VideoEditor to remove the specified parts from the video.

- Clear the spinner and display the edited video using Streamlit's st.video().
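The VideoEditor class from earlier in the series handles the actual cutting, and its internals are not reproduced in this article. Conceptually, removing parts of a video means keeping the complement of the removed ranges. The hypothetical segments_to_keep() helper below sketches that step, assuming ranges are (start, end) pairs in seconds. It clamps ranges to the video duration and tolerates overlaps.

```python
from typing import List, Tuple

Range = Tuple[float, float]


def segments_to_keep(duration: float, ranges_to_remove: List[Range]) -> List[Range]:
    """Return the (start, end) spans that survive after cutting out ranges_to_remove."""
    keep: List[Range] = []
    cursor = 0.0  # end of the furthest removed range processed so far
    for start, end in sorted(ranges_to_remove):
        # Clamp each range to the video's [0, duration] interval
        start = min(max(start, 0.0), duration)
        end = min(max(end, 0.0), duration)
        if start > cursor:
            keep.append((cursor, start))
        cursor = max(cursor, end)
    if cursor < duration:
        keep.append((cursor, duration))
    return keep


print(segments_to_keep(10.0, [(2.0, 3.0), (5.0, 6.5)]))
# [(0.0, 2.0), (3.0, 5.0), (6.5, 10.0)]
```

An editor built on this idea could extract each kept span as a subclip (for example with MoviePy) and concatenate the results. The series' VideoEditor may take a different approach internally.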

How the Components Work Together

Now that each part of the code has been examined, let's take a step back and see how these components work together to create a cohesive application:

- When the app starts, it sets up the page configuration and checks if the Flask server is running, starting it if needed.

- Users see a clean interface with a logo, tagline, and file upload widget in the sidebar.

- When a video is uploaded, the app:
  - Creates a directory structure for the files.
  - Validates the video.
  - Displays the original video using Video.js.
  - Preprocesses the video to extract audio.
  - Transcribes the audio using Whisper.
  - Displays the transcription in an editable text area.

- As users edit the transcription, the app:
  - Validates the edit to ensure only deletions are made.
  - Updates the session state with the new transcription.
  - Records the edit in history for undo functionality.
  - Calculates which parts of the video to remove.
  - Updates the video player to preview the edits.

- If users click "Undo Last Edit," the app:
  - Retrieves the previous transcription from history.
  - Updates the text area and video preview.
  - Displays an error if there are no edits to undo.

- Finally, when users click "Generate Edited Video," the app:
  - Shows a spinner to indicate processing.
  - Uses VideoEditor to remove the specified parts from the video.
  - Displays the final edited video.

The app leverages Streamlit's reactive execution model, which reruns the script from top to bottom with each user interaction. It uses session state to preserve data between reruns, so the application keeps working smoothly despite frequent refreshes.

Below is the complete code for the app, encompassing the entire script:

import os

import socket

import subprocess

import time

import streamlit as st

from streamlit.components import v1

from moviepy.editor import VideoFileClip

from video_editing.video_editing_pipeline import VideoEditor

from preprocessing.preprocessing_pipeline import Preprocessor

from transcribing.transcription_pipeline import (

Transcriber,

WhisperLargeTurbo,

WhisperTimestamped,

)

from helper_functions.helper_functions import (

generate_videojs_player,

is_valid_edit,

prepare_playback_ranges,

TextToTimestampConverter,

)

def is_port_in_use(port: int):

"""

Check if a given port is in use.

Args:

port (int): Port number to check.

Returns:

bool: True if the port is in use, otherwise False.

"""

with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:

return s.connect_ex(("localhost", port)) == 0

def start_flask_server():

"""

Start the Flask server (flask_video_server.py) on port 8000 if it's not already running.

"""

if not is_port_in_use(8000):

# Start the Flask server in the background

server_process = subprocess.Popen(["python", "flask_video_server.py"])

st.session_state["server_process"] = server_process

# Give the server a moment to start

time.sleep(2)

def validate_and_process_edit():

"""

Validate the edited transcription, update session states, and refresh

the video player by removing specified time ranges.

"""

original_transcription = st.session_state["original_transcription"]

edited_transcription = st.session_state["transcription"]

transcription_data = st.session_state["transcription_data"]

if is_valid_edit(original_transcription, edited_transcription):

st.session_state["undo_error"] = False

# Update valid transcription and history

st.session_state["latest_valid_transcription"] = edited_transcription

if edited_transcription not in st.session_state["transcription_history"]:

st.session_state["transcription_history"].append(edited_transcription)

# Update the chunks to reflect the removed words

converter = TextToTimestampConverter(transcription_data)

updated_chunks = converter.update_chunks(edited_transcription)

# Gather timestamps that will be removed

timestamps_to_remove = [

chunk["timestamp"]

for chunk in transcription_data["chunks"]

if chunk not in updated_chunks

]

st.session_state["ranges_to_remove"] = timestamps_to_remove

# Prepare playback ranges to highlight removed parts

playback_ranges = prepare_playback_ranges(

transcription_data["chunks"],

timestamps_to_remove

)

# Generate an updated video player

file_name = os.path.basename(st.session_state["video_path"])

base_file_name = os.path.splitext(file_name)[0]

directory_name = f"{base_file_name}_for_editing"

video_url = f"http://localhost:8000/videos/{directory_name}"

videojs_html = generate_videojs_player(video_url, file_name, playback_ranges)

# Center the video in the layout

centered_videojs_html = f"""

<div style="display: flex; justify-content: center; align-items: center; margin-top: 20px;">

{videojs_html}

</div>

"""

v1.html(centered_videojs_html, height=400)

# Hide original player once edited version is displayed

st.session_state["hide_original_player"] = True

else:

# Invalid edit -> revert transcription

if "latest_valid_transcription" in st.session_state:

st.session_state["transcription"] = st.session_state["latest_valid_transcription"]

else:

st.session_state["transcription"] = original_transcription

st.sidebar.error("Invalid edit: Only deletions are allowed.")

def undo_last_edit() -> None:

"""

Undo the last transcription edit by reverting to the previous transcription in history.

"""

# If the latest transcription is not the original, pop it from the history

if st.session_state["transcription_history"][-1] != st.session_state["original_transcription"]:

st.session_state["transcription_history"].pop()

st.session_state["transcription"] = st.session_state["transcription_history"][-1]

st.session_state["undo_error"] = False

validate_and_process_edit()

else:

st.session_state["undo_error"] = True

st.session_state["hide_original_player"] = False

def video_editing_app() -> None:
    """
    Main Streamlit app for editing videos by removing words from the transcribed text.
    """
    st.set_page_config(
        page_title="Video Editing App",
        layout="wide",
        initial_sidebar_state="expanded"
    )

    # Hide Streamlit's default UI elements (menu, footer, etc.)
    hide_streamlit_style = """
    <style>
    #MainMenu {visibility: hidden;}
    footer {visibility: hidden;}
    .stStatusWidget {display: none;}
    header {visibility: hidden;}
    </style>
    """
    st.markdown(hide_streamlit_style, unsafe_allow_html=True)

    # Sidebar layout
    st.sidebar.markdown(
        """
        <div style="text-align: center; margin-bottom: 20px;">
        <img src="https://edlitera-images.s3.amazonaws.com/new_edlitera_logo.png" width="150"/>
        </div>
        <div style="text-align: center;">Edit your video by removing words from it!</div>
        """,
        unsafe_allow_html=True
    )

    # Ensure Flask server is running
    if "server_process" not in st.session_state:
        start_flask_server()

    # File uploader
    uploaded_file = st.sidebar.file_uploader(
        "Upload your file here",
        type=["mp4", "mov", "avi"],
        label_visibility="hidden"
    )

    if uploaded_file:
        file_name = uploaded_file.name
        base_file_name = os.path.splitext(file_name)[0]
        directory_name = f"{base_file_name}_for_editing"
        directory_path = os.path.join(os.getcwd(), "videos", directory_name)
        os.makedirs(directory_path, exist_ok=True)
        video_path = os.path.join(directory_path, file_name)

        # If a new or different file is uploaded, reset state
        if "video_path" not in st.session_state or st.session_state["video_path"] != video_path:
            with open(video_path, "wb") as f:
                f.write(uploaded_file.getbuffer())
            st.session_state["video_path"] = video_path
            st.session_state["original_transcription"] = None
            st.session_state["transcription"] = None
            st.session_state["transcription_data"] = None
            st.session_state["latest_valid_transcription"] = None
            st.session_state["hide_original_player"] = False
            st.session_state["transcription_history"] = []
            st.session_state["undo_error"] = False
            # Drop cut ranges left over from a previously uploaded video
            st.session_state["ranges_to_remove"] = None

        # Validate video
        try:
            with VideoFileClip(video_path) as clip:
                _ = clip.duration
            valid_video = True
        except Exception:
            st.sidebar.error("Invalid video file. Please upload a valid video.")
            valid_video = False

        if valid_video:
            video_url = f"http://localhost:8000/videos/{directory_name}"

            # Show original player if not hidden
            if not st.session_state["hide_original_player"]:
                videojs_html = generate_videojs_player(video_url, file_name)
                centered_videojs_html = f"""
                <div style="display: flex; justify-content: center; align-items: center; margin-top: 20px;">
                {videojs_html}
                </div>
                """
                v1.html(centered_videojs_html, height=400)

            # Transcribe if no transcription is stored
            if st.session_state.get("original_transcription") is None:
                preprocessor = Preprocessor(video_path)
                preprocessor.extract_audio()

                # Transcription
                # Optionally use WhisperTimestamped() if needed
                whisper_transcriber = WhisperLargeTurbo(language="en")
                transcriber = Transcriber(whisper_transcriber)
                audio_path = os.path.join(directory_path, f"{base_file_name}_audio.wav")
                transcription = transcriber.transcribe(audio_path)
                transcription_text = transcription["text"]

                st.session_state["original_transcription"] = transcription_text
                st.session_state["transcription"] = transcription_text
                st.session_state["transcription_data"] = transcription
                st.session_state["latest_valid_transcription"] = transcription_text
                st.session_state["transcription_history"].append(transcription_text)

            # Sidebar text area for editing transcription
            st.sidebar.text_area(
                "Edit transcription (deletions only):",
                key="transcription",
                height=300,
                on_change=validate_and_process_edit
            )
            st.sidebar.button("Undo Last Edit", on_click=undo_last_edit)

            error_placeholder = st.sidebar.empty()
            if st.session_state["undo_error"] is True:
                error_placeholder.error("No edits to undo")

            # Button to generate edited video
            left, middle, right = st.columns(3)
            button_placeholder = middle.empty()
            if button_placeholder.button("Generate Edited Video", use_container_width=True):
                if st.session_state.get("ranges_to_remove"):
                    output_file_name = "edited_video.mp4"
                    output_path = os.path.join(directory_path, output_file_name)
                    button_placeholder.empty()
                    st.session_state["hide_original_player"] = True

                    # Spinner during processing
                    spinner_html = """
                    <div style="display: flex; justify-content: center; align-items: center; height: 200px;">
                    <div class="loader"></div>
                    </div>
                    <style>
                    .loader {
                        border: 16px solid #f3f3f3;
                        border-radius: 50%;
                        border-top: 16px solid #3498db;
                        width: 120px;
                        height: 120px;
                        animation: spin 2s linear infinite;
                    }
                    @keyframes spin {
                        0% { transform: rotate(0deg); }
                        100% { transform: rotate(360deg); }
                    }
                    </style>
                    """
                    spinner_placeholder = st.empty()
                    spinner_placeholder.markdown(spinner_html, unsafe_allow_html=True)

                    try:
                        editor = VideoEditor(st.session_state["video_path"])
                        editor.remove_parts(st.session_state["ranges_to_remove"], output_path)
                    finally:
                        spinner_placeholder.empty()

                    st.video(output_path)
                else:
                    st.session_state["hide_original_player"] = False
                    middle.warning("Video hasn't been modified.")

if __name__ == "__main__":
    video_editing_app()
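The undo logic in undo_last_edit() follows a simple stack discipline. Assuming each valid edit is appended to transcription_history (as the original transcription is right after the video is transcribed), undo pops the newest entry, and the original transcription at the bottom is never removed. The sketch below reproduces only that logic without Streamlit, so it can be tried in isolation. The function name undo() and its return tuple are hypothetical, a plain list stands in for st.session_state["transcription_history"], and the follow-up call to validate_and_process_edit() is deliberately left out.

```python
from typing import List, Tuple


def undo(history: List[str], original: str) -> Tuple[List[str], str, bool]:
    """Return (new_history, current_text, undo_error) after one undo step.

    Hypothetical helper mirroring undo_last_edit(): the newest entry is popped
    unless it is the original transcription, in which case an undo error is
    reported instead.
    """
    new_history = list(history)  # work on a copy; the caller's list is untouched
    if new_history[-1] != original:
        new_history.pop()
        return new_history, new_history[-1], False
    return new_history, new_history[-1], True


# Hypothetical edit history: the original plus two deletion-only edits
original = "the quick brown fox"
history = [original, "the brown fox", "the fox"]

for _ in range(3):
    history, current, undo_error = undo(history, original)
    print(repr(current), undo_error)
# Prints:
# 'the brown fox' False
# 'the quick brown fox' False
# 'the quick brown fox' True
```

Like the original function, this assumes the history is never empty, which holds in the app because the original transcription is appended as soon as transcription finishes.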

In this final article of our series on building a video editing app with Python, we brought together all the components developed throughout the series. The result is an application that lets users edit a video simply by deleting words from its transcript. Every valid edit immediately updates a Video.js preview that plays back only the retained segments, and once users are satisfied with their edits, a single click on "Generate Edited Video" renders the final cut.
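Both the preview and the final cut depend on the same bookkeeping: turning a list of removed (start, end) ranges into the ranges that should still play. The sketch below shows one way to do that, assuming times in seconds and a known clip duration. The helper names merge_ranges() and ranges_to_keep() are hypothetical; this illustrates the idea and is not the playback-range code from earlier in the series.

```python
from typing import List, Tuple

Range = Tuple[float, float]  # (start, end) in seconds


def merge_ranges(ranges: List[Range]) -> List[Range]:
    """Sort ranges and merge any that overlap or touch."""
    merged: List[Range] = []
    for start, end in sorted(ranges):
        if merged and start <= merged[-1][1]:
            # Overlaps or touches the previous range: extend it
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def ranges_to_keep(duration: float, removed: List[Range]) -> List[Range]:
    """Return the parts of [0, duration] that no removed range covers."""
    keep: List[Range] = []
    cursor = 0.0  # end of the last removed range processed so far
    for start, end in merge_ranges(removed):
        # Clamp to the clip; skip ranges that fall entirely outside it
        start, end = max(start, 0.0), min(end, duration)
        if start >= end:
            continue
        if start > cursor:
            keep.append((cursor, start))
        cursor = max(cursor, end)
    if cursor < duration:
        keep.append((cursor, duration))
    return keep


# Two overlapping cuts and one separate cut in a 10-second clip
print(ranges_to_keep(10.0, [(2.0, 3.0), (2.5, 4.0), (7.0, 8.0)]))
# [(0.0, 2.0), (4.0, 7.0), (8.0, 10.0)]
```

Merging first keeps the complement step simple: once the removed ranges are sorted and disjoint, a single pass with a cursor is enough to collect the gaps between them.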

This approach is particularly useful for content creators, educators, and anyone who wants to make quick edits to videos without learning complex editing software. Because each transcribed word carries its own timestamps, deleting text maps directly to cutting the matching span of video, which makes editing a video feel much like editing a document.
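The link between text and video rests on those word-level timestamps. As a rough illustration, the sketch below compares the original words with the edited text using Python's difflib and collects the timestamps of the deleted words. It is not the helper from the earlier articles: the function name removed_word_timestamps() is hypothetical, and it assumes one chunk per word, in transcript order, with each chunk shaped like {"text": ..., "timestamp": (start, end)}.

```python
import difflib
from typing import Any, Dict, List, Tuple

# Assumed chunk shape: {"text": " word", "timestamp": (start_seconds, end_seconds)}
Chunk = Dict[str, Any]


def removed_word_timestamps(chunks: List[Chunk], edited_text: str) -> List[Tuple[float, float]]:
    """Return the (start, end) timestamps of every original word missing from edited_text.

    Assumes exactly one chunk per word, in transcript order, so chunk i is word i.
    """
    original_words = [chunk["text"].strip() for chunk in chunks]
    edited_words = edited_text.split()
    # autojunk=False stops difflib from treating very common words as junk in long transcripts
    matcher = difflib.SequenceMatcher(a=original_words, b=edited_words, autojunk=False)
    removed: List[Tuple[float, float]] = []
    for tag, i1, i2, _j1, _j2 in matcher.get_opcodes():
        if tag == "delete":  # words present in the original but not in the edit
            removed.extend(tuple(chunks[i]["timestamp"]) for i in range(i1, i2))
    return removed


chunks = [
    {"text": " Hello", "timestamp": (0.0, 0.4)},
    {"text": " um", "timestamp": (0.4, 0.7)},
    {"text": " world", "timestamp": (0.7, 1.1)},
]
print(removed_word_timestamps(chunks, "Hello world"))  # [(0.4, 0.7)]
```

Because the app only accepts deletions, matching the two word lists should normally yield only "equal" and "delete" opcodes. The sketch ignores any other opcode, so it is not a general-purpose diffing solution.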