MLOps, or Machine Learning Operations, is a new term for many, but the concept has existed for some time. MLOps applies DevOps practices to machine learning in order to automate how machine learning applications are built, deployed, and maintained. By shortening a system’s development life cycle, MLOps enables continuous, high-quality delivery. As larger amounts of data became available, companies saw the potential of MLOps to improve their services. As demand grew for machine learning and for data scientists who could streamline production development, organizations turned their attention to making data-heavy machine learning models run more efficiently in production.

As MLOps became more mainstream, many companies wanted to get started with it as quickly as possible. Many of the most successful ones began by practicing MLOps with managed services available in the cloud. Managed services can serve as an entryway to MLOps, or even as a long-term solution, depending on the organization’s needs. The managed MLOps services offered by AWS are one example.

Amazon Web Services, or AWS, offers numerous services dedicated specifically to MLOps, such as Amazon SageMaker. An organization that already uses cloud platforms can adopt MLOps practices on AWS with relatively little setup, which makes managing the machine learning lifecycle easier. This article takes a deep dive into the AWS services connected to MLOps and demonstrates how they can be used.

Benefits of AWS for MLOps

Before explaining AWS, it helps to understand cloud computing. When it first arrived, cloud computing was a revolutionary idea: instead of managing their own computing infrastructure, companies could pay a cloud service provider to manage it for them. At a basic level, cloud computing works as a virtual platform. A large network of remote servers is available on demand for companies to rent in order to store, retrieve, and process data. Companies therefore do not need to set up and maintain on-premises infrastructure, and can instead focus on other aspects of their work for greater productivity. Cloud computing offers benefits in terms of:

• Speed - Resources and services are available immediately.

• Accessibility - Data, resources, and services can be accessed easily if the user is connected to the internet.

• Cost - Removes the need to spend money on hardware that could become outdated.

• Scalability - Able to upscale or downscale work easily to adapt to the needs of the company.

• Security - Secures data with encryption, keeping it safe while still accessible.

One such cloud computing platform is AWS (Amazon Web Services). The services AWS offers can be roughly separated into three main models:

• IaaS - Infrastructure as a service

• PaaS - Platform as a service

• SaaS - Software as a service

IaaS Model

The IaaS model allows users access to different features such as:

• Networking features

• Virtual machines or dedicated hardware

• Data storage space

A company using the IaaS model will have all the building blocks necessary to create a flexible system for managing its IT resources according to industry standards.

PaaS Model

The PaaS model provides the user with a complete platform. The provider manages the underlying infrastructure, so users can focus on deploying and managing their applications. This makes it easier to handle the complicated processes and infrastructure needed to run an application.

SaaS Model

The SaaS model provides a complete product, so the only thing the user needs to worry about is how to use the application most effectively. It is easily the simplest model, but also the least flexible, since the user can't change how the product works at a fundamental level.

How to Use AWS for MLOps

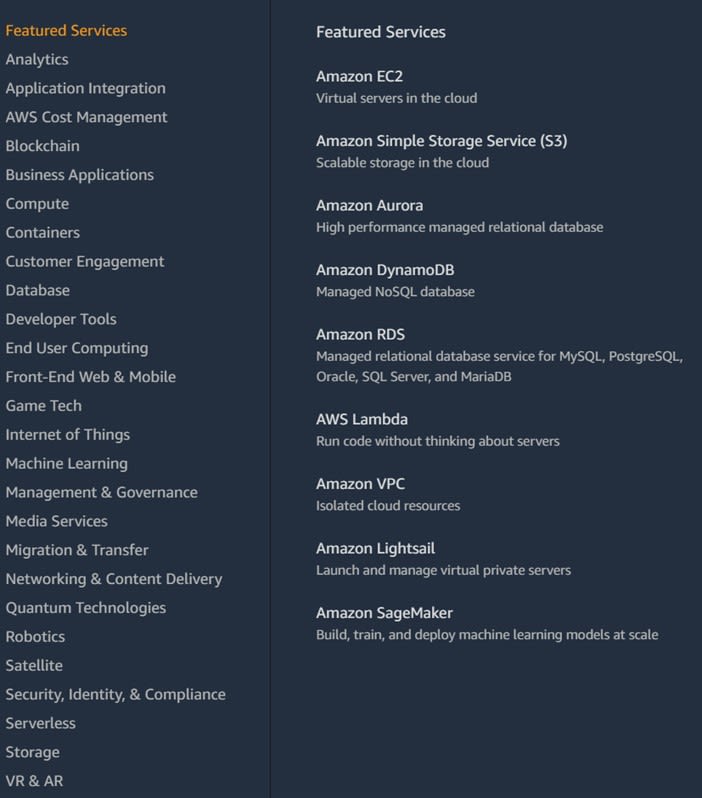

Image Source: Featured Services on AWS, https://aws.amazon.com

Now that we know how AWS works, let's explain and demonstrate the services that can be used for MLOps. We will start by listing the AWS services commonly used to build MLOps workflows:

• AWS CodeCommit

• Amazon SageMaker

• AWS CodePipeline

• AWS CodeBuild

• AWS CloudFormation

• Amazon S3

• Amazon CloudWatch

Data engineering is also an important part of MLOps, but AWS services for specialized data engineering tasks are outside the scope of this article. We will assume that, if you are interested in MLOps, you already have some type of Extract, Transform, and Load (ETL) procedure in place. To set up an ETL procedure on AWS, you could use AWS Glue, the serverless ETL service provided by AWS. One service not mentioned in the list above is AWS CodeStar. CodeStar is a special service that mainly provides a dashboard for managing the other services in your MLOps setup. We will cover CodeStar in more detail later in this article.

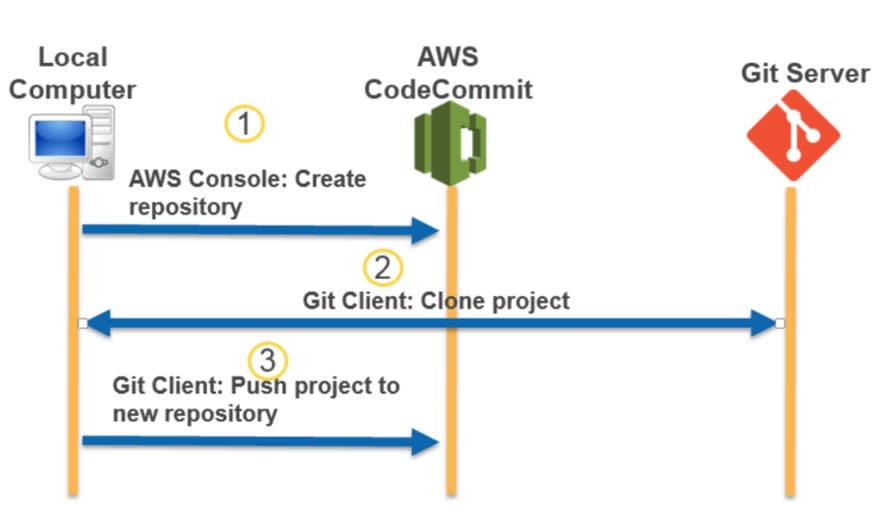

AWS CodeCommit

CodeCommit provides secure, Git-based repositories that make collaboration between team members easier. As a version control service, it lets users easily create and manage their Git repositories. Because it is fully managed, CodeCommit is simple to use: users can focus on their work instead of operating the system or scaling its infrastructure. It also works with existing Git tools, which makes it a good option for teams with multiple members working on the same code.
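As a minimal sketch, assuming the boto3 SDK is installed and AWS credentials are configured, a repository can be created with CodeCommit's `create_repository` call. The repository name `mlops-models` and the region are hypothetical placeholders. The URL helper runs without any AWS access and follows the HTTPS clone URL format that CodeCommit uses.

```python
"""Sketch: creating a CodeCommit repository with boto3 (names are hypothetical)."""


def codecommit_https_url(repo_name: str, region: str) -> str:
    """Build the HTTPS clone URL for a CodeCommit repository.

    Format: https://git-codecommit.<region>.amazonaws.com/v1/repos/<repo-name>
    """
    if not repo_name:
        raise ValueError("repository name must not be empty")
    return f"https://git-codecommit.{region}.amazonaws.com/v1/repos/{repo_name}"


def create_repository(repo_name: str, description: str, region: str) -> str:
    """Create a repository and return its HTTPS clone URL (needs AWS credentials)."""
    import boto3  # imported lazily so the URL helper works without the SDK installed

    client = boto3.client("codecommit", region_name=region)
    response = client.create_repository(
        repositoryName=repo_name,
        repositoryDescription=description,
    )
    # The response describes the new repository, including its clone URLs.
    return response["repositoryMetadata"]["cloneUrlHttp"]


if __name__ == "__main__":
    # Hypothetical repository name and region; no AWS call is made here.
    print(codecommit_https_url("mlops-models", "us-east-1"))
```

Team members would then clone the returned URL with ordinary Git commands, which is what lets CodeCommit fit into existing Git workflows.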

Image Source: Migrating a Git Repository to CodeCommit, https://docs.aws.amazon.com/codecommit/latest/userguide/how-to-migrate-repository-existing.html

Amazon SageMaker

Like CodeCommit, Amazon SageMaker is a fully managed service. It simplifies building, training, and deploying models, which allows data scientists and machine learning engineers to focus on making better models. Building a high-quality machine learning workflow takes time because machine learning models differ from standard programs: their workflows are much harder to create and require several tools to build. Amazon SageMaker provides most of the tools needed for a smooth workflow, and it frequently receives new, useful features. For instance, the following relatively recent features build on the existing structure of SageMaker and make managing the MLOps workflow easier:

• SageMaker Data Wrangler

• SageMaker Feature Store

• SageMaker Clarify

• SageMaker JumpStart

• Distributed Training

• SageMaker Debugger

• SageMaker Edge Manager

• SageMaker Pipelines

This large repertoire of tools is what differentiates SageMaker from other services. It meets the basic needs of most data scientists and machine learning engineers, and with additional services such as Amazon SageMaker Ground Truth and Amazon Augmented AI, SageMaker is one of the most versatile machine learning services currently available.
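To show what "training a model" looks like at the API level, the sketch below builds the keyword arguments for boto3's low-level `sagemaker.create_training_job` call. It is a minimal sketch under stated assumptions: the job name, image URI, IAM role ARN, and S3 paths are all hypothetical placeholders, and the instance settings are illustrative. The higher-level SageMaker Python SDK (`sagemaker.estimator.Estimator`) wraps this same operation.

```python
"""Sketch: building a SageMaker CreateTrainingJob request (all values hypothetical)."""
import re

# Mirrors the documented training job name pattern: up to 63 alphanumerics or hyphens.
JOB_NAME_PATTERN = re.compile(r"[A-Za-z0-9](-*[A-Za-z0-9]){0,62}")


def training_job_request(job_name, image_uri, role_arn, train_s3_uri, output_s3_uri,
                         instance_type="ml.m5.large", max_runtime_s=3600):
    """Return keyword arguments for boto3's sagemaker.create_training_job."""
    if not JOB_NAME_PATTERN.fullmatch(job_name):
        raise ValueError(f"invalid training job name: {job_name!r}")
    return {
        "TrainingJobName": job_name,
        # The container image that holds the training code.
        "AlgorithmSpecification": {"TrainingImage": image_uri, "TrainingInputMode": "File"},
        "RoleArn": role_arn,  # role SageMaker assumes to read data and write the model
        "InputDataConfig": [{
            "ChannelName": "train",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3_uri,
                "S3DataDistributionType": "FullyReplicated",
            }},
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},  # where model artifacts land
        "ResourceConfig": {"InstanceType": instance_type, "InstanceCount": 1,
                           "VolumeSizeInGB": 10},
        "StoppingCondition": {"MaxRuntimeInSeconds": max_runtime_s},
    }


if __name__ == "__main__":
    request = training_job_request(
        job_name="churn-model-001",
        image_uri="<training-image-uri>",  # hypothetical placeholder
        role_arn="arn:aws:iam::123456789012:role/SageMakerExecutionRole",  # hypothetical
        train_s3_uri="s3://my-ml-bucket/train/",
        output_s3_uri="s3://my-ml-bucket/models/",
    )
    # With AWS credentials configured, the job would be started like this:
    # import boto3
    # boto3.client("sagemaker").create_training_job(**request)
    print(request["TrainingJobName"])
```

Keeping the request as plain data makes it easy to version it in CodeCommit and reuse it from a pipeline.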

An especially useful part of SageMaker is SageMaker Studio, a web-based IDE that makes creating, training, tuning, and deploying models more manageable through a visual interface. It can be used to create notebooks, experiments, models, and much more.

Image Source: Amazon SageMaker Studio’s Visual Interface, https://aws.amazon.com/sagemaker/

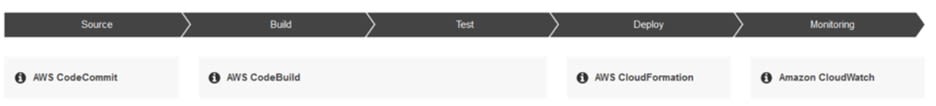

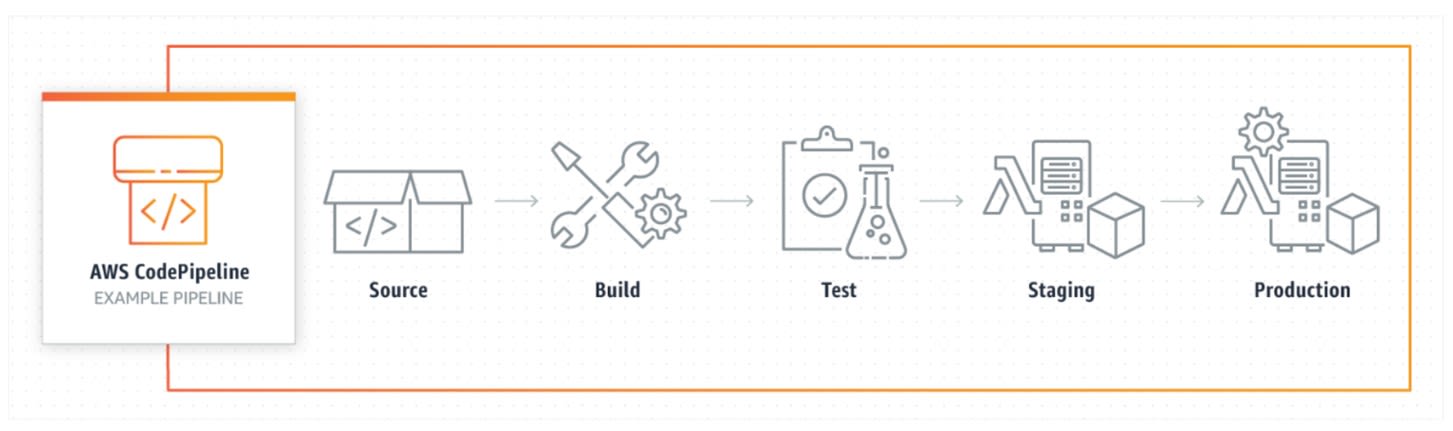

AWS CodePipeline

Continuing the trend of the previous services, AWS CodePipeline is also fully managed. It is used to create end-to-end pipelines that automate the different phases of the release process for models. Choosing CodePipeline as the continuous delivery service allows users to adapt quickly and deliver features and updates swiftly, which is especially useful when models are updated frequently. CodePipeline integrates easily with other services, including tools that are not offered by AWS, and it can be extended with custom, user-created plugins. One of its most underrated features is the pipeline visualization it offers, which simplifies monitoring and tracking.
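A pipeline is described as a list of stages, each containing actions that pass named artifacts along. The sketch below builds a two-stage definition (CodeCommit source, then a CodeBuild build) in the shape accepted by boto3's `codepipeline.create_pipeline(pipeline=...)`. The pipeline name, role ARN, bucket, repository, and project names are hypothetical, and a real MLOps pipeline would usually add further stages such as deployment or approval.

```python
"""Sketch: a two-stage CodePipeline definition (names and ARNs are hypothetical)."""


def pipeline_definition(name, role_arn, artifact_bucket, repo_name, build_project,
                        branch="main"):
    """Return the `pipeline` structure for boto3's codepipeline.create_pipeline."""
    return {
        "name": name,
        "roleArn": role_arn,
        # Artifacts passed between stages are stored in this S3 bucket.
        "artifactStore": {"type": "S3", "location": artifact_bucket},
        "stages": [
            {
                "name": "Source",
                "actions": [{
                    "name": "FetchSource",
                    "actionTypeId": {"category": "Source", "owner": "AWS",
                                     "provider": "CodeCommit", "version": "1"},
                    "configuration": {"RepositoryName": repo_name, "BranchName": branch},
                    "outputArtifacts": [{"name": "SourceOutput"}],
                }],
            },
            {
                "name": "Build",
                "actions": [{
                    "name": "TrainAndTest",
                    "actionTypeId": {"category": "Build", "owner": "AWS",
                                     "provider": "CodeBuild", "version": "1"},
                    "configuration": {"ProjectName": build_project},
                    # The build consumes the artifact produced by the Source stage.
                    "inputArtifacts": [{"name": "SourceOutput"}],
                    "outputArtifacts": [{"name": "BuildOutput"}],
                }],
            },
        ],
    }


if __name__ == "__main__":
    definition = pipeline_definition(
        name="mlops-pipeline",
        role_arn="arn:aws:iam::123456789012:role/PipelineRole",  # hypothetical
        artifact_bucket="my-pipeline-artifacts",
        repo_name="mlops-models",
        build_project="mlops-build",
    )
    # With AWS credentials configured:
    # import boto3
    # boto3.client("codepipeline").create_pipeline(pipeline=definition)
    print([stage["name"] for stage in definition["stages"]])
```

Wiring stages together through named artifacts is what lets each push to the repository flow automatically into a new build.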

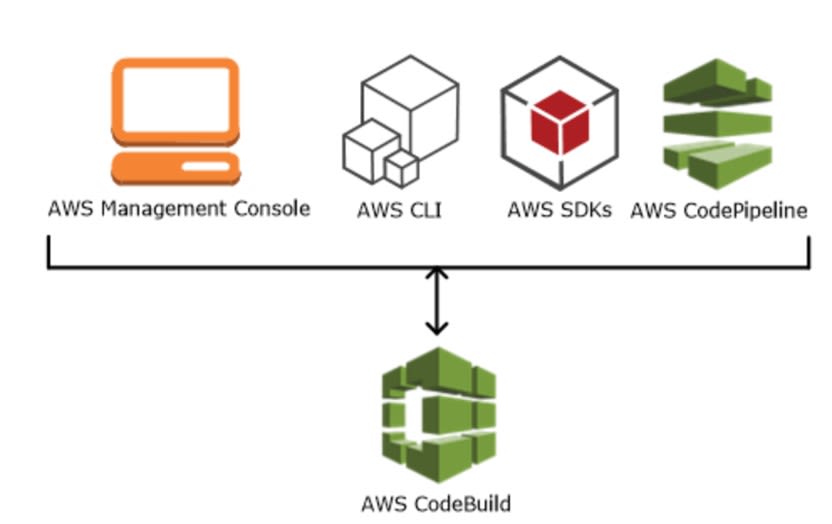

AWS CodeBuild

CodeBuild is a fully managed continuous integration service. Combined with CodePipeline, it lets users build high-quality CI/CD pipelines on AWS. CodeBuild compiles source code, runs tests, and produces software packages that are ready to deploy. It scales to run multiple builds concurrently and is easy to use: to get started, users select either a prepackaged build environment or a custom one.
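The sketch below shows the keyword arguments for boto3's `codebuild.create_project` for a project that is driven by CodePipeline, together with an inline buildspec. It assumes a prepackaged Linux image (the `aws/codebuild/standard:7.0` tag is an assumption; check the currently supported images) and hypothetical repository contents: `requirements.txt`, a `tests/` folder, a `train.py` script, and a `model/` output directory.

```python
"""Sketch: a CodePipeline-driven CodeBuild project with an inline buildspec."""

# Hypothetical build steps: install dependencies, run tests, then train the model.
BUILDSPEC = """\
version: 0.2
phases:
  install:
    commands:
      - pip install -r requirements.txt
  build:
    commands:
      - python -m pytest tests/
      - python train.py
artifacts:
  files:
    - model/**/*
"""


def build_project_request(name, service_role_arn, image="aws/codebuild/standard:7.0"):
    """Return keyword arguments for boto3's codebuild.create_project."""
    return {
        "name": name,
        # With CODEPIPELINE source and artifacts, the pipeline supplies the input
        # and collects the build output.
        "source": {"type": "CODEPIPELINE", "buildspec": BUILDSPEC},
        "artifacts": {"type": "CODEPIPELINE"},
        "environment": {
            "type": "LINUX_CONTAINER",
            "image": image,  # prepackaged image; a custom image could be used instead
            "computeType": "BUILD_GENERAL1_SMALL",
        },
        "serviceRole": service_role_arn,
    }


if __name__ == "__main__":
    request = build_project_request(
        "mlops-build", "arn:aws:iam::123456789012:role/CodeBuildRole")  # hypothetical
    # With AWS credentials configured:
    # import boto3
    # boto3.client("codebuild").create_project(**request)
    print(request["environment"]["image"])
```

Swapping the `image` argument is how the choice between a prepackaged and a custom environment shows up in practice.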

CodeBuild is often integrated with AWS Identity and Access Management (IAM), which serves as a layer of protection for the user. With IAM, the user can easily define who can access which projects.
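Access rules in IAM are written as JSON policy documents. As a minimal sketch, the policy below allows a principal to start and inspect builds of a single CodeBuild project and nothing else. The region, account ID, and project name are hypothetical. Such a document could be attached to a role with boto3's `iam.put_role_policy(RoleName=..., PolicyName=..., PolicyDocument=json.dumps(policy))`.

```python
"""Sketch: an IAM policy scoped to one CodeBuild project (IDs are hypothetical)."""
import json


def codebuild_project_arn(region: str, account_id: str, project: str) -> str:
    """Build the ARN that identifies a CodeBuild project."""
    return f"arn:aws:codebuild:{region}:{account_id}:project/{project}"


def start_build_policy(region: str, account_id: str, project: str) -> dict:
    """Return an identity-based policy allowing builds of a single project."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": ["codebuild:StartBuild", "codebuild:BatchGetBuilds"],
            # Restricting the resource keeps other projects off-limits.
            "Resource": codebuild_project_arn(region, account_id, project),
        }],
    }


if __name__ == "__main__":
    policy = start_build_policy("us-east-1", "123456789012", "mlops-build")
    print(json.dumps(policy, indent=2))
```

Scoping the `Resource` to one project ARN, rather than using a wildcard, is what lets different team members be limited to the projects they actually work on.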

Image Source: Different Ways of Running CodeBuild, https://www.cloudsavvyit.com/3398/how-to-get-started-with-codebuild-awss-automated-build-service/

AWS CodeStar

AWS CodeStar is unique because it doesn't bring anything new to an MLOps stack. Rather, it serves as a UI that makes tracking and managing other services easier, covering the DevOps part of the system. CodeStar projects integrate CodeBuild, CodeDeploy, CodeCommit, and CodePipeline. CodeStar has four main parts:

• Project Template - Provides different templates for various project types and multiple programming languages.

• Project Access - A simple tool to manage team members’ access depending on their role. The defined permissions carry on through all AWS services that are being utilized.

• Dashboard - Gives an overall view of the project, tracking code changes, build results, and more.

• Extensions - Added functionalities for the dashboard.

Image Source: AWS CodeStar’s Automated Continuous Delivery Pipeline, https://aws.amazon.com/codestar/features/

AWS CloudFormation

AWS CloudFormation is one of the most important MLOps services AWS offers because it addresses one of the biggest potential pain points of cloud computing: provisioning and managing many interdependent resources by hand. CloudFormation simplifies this process, saving time that would otherwise be spent on infrastructure management problems. The whole stack of AWS resources can be defined in a template, a text file written in JSON or YAML. Templates are then used to deploy the resources they describe, and they can be parameterized so that they are reusable. For example, a template might deploy an S3 bucket, an AWS Lambda function, an Amazon API Gateway API, an AWS CodePipeline pipeline, and an AWS CodeBuild project. A typical process can be seen in the image below.

Image Source: AWS CloudFormation Process, https://aws.amazon.com/cloudformation/
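To make the template idea concrete, the sketch below builds a small CloudFormation template as a Python dictionary and serializes it to JSON. It declares one versioned S3 bucket for model artifacts, takes the bucket name as a parameter so the same template can be reused across environments, and outputs the bucket ARN. The logical ID `ModelArtifactsBucket`, the stack name, and the bucket names are hypothetical.

```python
"""Sketch: a reusable CloudFormation template for a model-artifact bucket."""
import json


def artifact_bucket_template() -> dict:
    """Return a template declaring one versioned S3 bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Versioned S3 bucket for model artifacts (illustrative)",
        "Parameters": {
            # Parameterizing the name makes the template reusable per environment.
            "BucketName": {"Type": "String"},
        },
        "Resources": {
            "ModelArtifactsBucket": {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "BucketName": {"Ref": "BucketName"},
                    "VersioningConfiguration": {"Status": "Enabled"},
                },
            },
        },
        "Outputs": {
            "BucketArn": {"Value": {"Fn::GetAtt": ["ModelArtifactsBucket", "Arn"]}},
        },
    }


if __name__ == "__main__":
    body = json.dumps(artifact_bucket_template(), indent=2)
    print(body)
    # With AWS credentials configured, the same template could back one stack per
    # environment (names below are hypothetical):
    # import boto3
    # boto3.client("cloudformation").create_stack(
    #     StackName="mlops-artifacts-dev",
    #     TemplateBody=body,
    #     Parameters=[{"ParameterKey": "BucketName",
    #                  "ParameterValue": "my-team-ml-artifacts-dev"}],
    # )
```

Deploying the same template twice with different `BucketName` values (for example, for development and production) is the kind of reuse the paragraph above describes.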

Amazon S3

Amazon S3 is short for Amazon Simple Storage Service. It is an object storage service that provides a scalable and secure way to store data on the internet without compromising data availability. S3 stores data in basic containers called buckets. Each bucket can hold as much data as the user wants, and the data is uploaded in the form of objects; however, each individual object can be at most 5 TB in size. The bucket owner can grant or deny access to other users by specifying who can and cannot upload and download data, and multiple authentication mechanisms in Amazon S3 ensure that only users with the necessary permissions can access the data.
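Large model artifacts are usually uploaded in parts. The sketch below encodes S3's documented multipart limits (objects up to 5 TB, parts from 5 MiB to 5 GiB, at most 10,000 parts) and picks a part size that fits a given object. boto3's managed transfers already handle multipart uploads for you, so this mainly illustrates the limits; the bucket and key in the commented usage are hypothetical.

```python
"""Sketch: choosing a multipart part size that respects S3's documented limits."""
import math

MIB = 1024 ** 2
GIB = 1024 ** 3
MAX_OBJECT_SIZE = 5 * 1024 ** 4   # 5 TB (5 TiB) maximum size of a single object
MIN_PART_SIZE = 5 * MIB           # smallest allowed part (except the last one)
MAX_PART_SIZE = 5 * GIB           # largest allowed part
MAX_PARTS = 10_000                # maximum number of parts in one upload


def multipart_part_size(object_size: int) -> int:
    """Return a part size in whole MiB that uploads `object_size` bytes in <= 10,000 parts."""
    if object_size > MAX_OBJECT_SIZE:
        raise ValueError("object exceeds the 5 TB S3 object size limit")
    needed = math.ceil(object_size / MAX_PARTS)           # bytes per part at the part cap
    part = max(MIN_PART_SIZE, math.ceil(needed / MIB) * MIB)  # round up to whole MiB
    return min(part, MAX_PART_SIZE)


if __name__ == "__main__":
    size = 100 * GIB  # e.g. a hypothetical 100 GiB model archive
    print(multipart_part_size(size) // MIB, "MiB per part")
    # With AWS credentials configured, the part size could be passed to boto3:
    # import boto3
    # from boto3.s3.transfer import TransferConfig
    # config = TransferConfig(multipart_chunksize=multipart_part_size(size))
    # boto3.client("s3").upload_file("model.tar.gz", "my-ml-bucket",
    #                                "models/model.tar.gz", Config=config)
```

Because the part count is capped at 10,000, the part size must grow with the object; at the 5 TB maximum it works out to 525 MiB per part, well under the 5 GiB ceiling.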

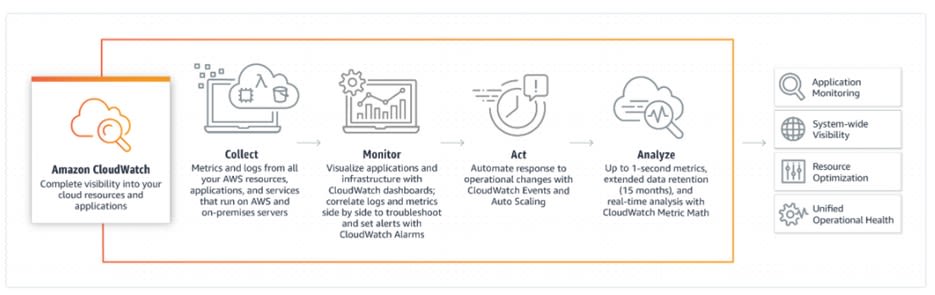

Amazon CloudWatch

Amazon CloudWatch is the monitoring service provided by AWS. It has many different uses, some of which are:

• Detecting anomalous behavior

• Setting alarms

• Visualizing logs and metrics

• Troubleshooting

CloudWatch is relatively simple to use and is aimed at DevOps engineers and software engineers. Automatic dashboards give users a unified view of everything CloudWatch is monitoring. CloudWatch has some other added benefits:

• It doesn’t require the user to set up an infrastructure.

• The user doesn't need to worry about maintenance.

• It is scalable.

Image Source: Amazon CloudWatch, https://aws.amazon.com/cloudwatch/
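For MLOps, a common use of CloudWatch is to publish a custom model metric and set an alarm on it. The sketch below builds the keyword arguments for boto3's `cloudwatch.put_metric_data` and `cloudwatch.put_metric_alarm`. The namespace `MLOps/ChurnModel`, the `ModelAccuracy` metric, and the 0.8 threshold are hypothetical choices, not CloudWatch defaults.

```python
"""Sketch: publishing a custom model metric and alarming on it (names hypothetical)."""
from datetime import datetime, timezone

NAMESPACE = "MLOps/ChurnModel"  # hypothetical custom namespace


def accuracy_metric(model_name: str, accuracy: float) -> dict:
    """Return kwargs for cloudwatch.put_metric_data with one accuracy datapoint."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    return {
        "Namespace": NAMESPACE,
        "MetricData": [{
            "MetricName": "ModelAccuracy",
            "Dimensions": [{"Name": "ModelName", "Value": model_name}],
            "Timestamp": datetime.now(timezone.utc),
            "Value": accuracy,
            "Unit": "None",
        }],
    }


def low_accuracy_alarm(model_name: str, threshold: float = 0.8) -> dict:
    """Return kwargs for cloudwatch.put_metric_alarm that fire when accuracy drops."""
    return {
        "AlarmName": f"{model_name}-low-accuracy",
        "Namespace": NAMESPACE,
        "MetricName": "ModelAccuracy",
        "Dimensions": [{"Name": "ModelName", "Value": model_name}],
        "Statistic": "Average",
        "Period": 300,            # evaluate 5-minute averages
        "EvaluationPeriods": 3,   # alarm after three consecutive breaching periods
        "Threshold": threshold,
        "ComparisonOperator": "LessThanThreshold",
    }


if __name__ == "__main__":
    # With AWS credentials configured:
    # import boto3
    # cloudwatch = boto3.client("cloudwatch")
    # cloudwatch.put_metric_data(**accuracy_metric("churn-model", 0.91))
    # cloudwatch.put_metric_alarm(**low_accuracy_alarm("churn-model"))
    print(low_accuracy_alarm("churn-model")["AlarmName"])
```

Using the model name as a dimension keeps metrics for different models separate, so each one can have its own alarm and dashboard widget.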

Conclusion

In addition to being one of the most widely available and popular cloud platforms, AWS offers a range of services that make managing the entire machine learning lifecycle easier. Companies that couldn't support the infrastructure needed to venture into MLOps now have affordable options at their disposal with AWS. In this article, we introduced the AWS services commonly used for MLOps. In the next articles in this series, we will cover each of these services in more detail, starting with SageMaker, and support all theoretical concepts with code examples. The goal of this series is to give a detailed introduction to these services and to prepare readers to use them.