
Automation is the independent processing of workflows with no human supervision. Many enterprises have already started to leverage automation and are seeing some of its most important benefits, including greater cost, time, and workflow efficiency; more consistent and accurate results; and reduced employee turnover. While workers may fear that they'll be replaced by automation, that's just a myth.

In reality, automation can free up your employees to apply their expertise more efficiently. In this article, I’ll walk through a real-world scenario of task automation at an insurance company, a project that ran into many challenges along the way. By the end, you’ll hopefully have a better understanding of what it takes to bring automation into a business.

Chances are you already have a system in place within your company to calculate metrics or answer questions that are of interest to you. While such systems can generate useful data, they are sometimes too simple to keep up with a changing industry or changing processes. Machine learning can bring your company a type of automation that adapts to change, and you can use it to improve on your existing system.

Case Study: Machine Learning for Task Automation

Let’s take a look at an automation task at a large, well-established insurance company.

What Problem Could Machine Learning Solve?

Throughout its long history, the insurance company in this example acquired many other companies and accumulated plenty of data about their customers along the way. However, after the acquisitions, the customer data remained dispersed across several different database systems and locales. To complicate matters further, the data had no consistent format and was often incomplete. The company’s data science team regularly found data about a given customer spread among multiple places without a global key to link the records together.

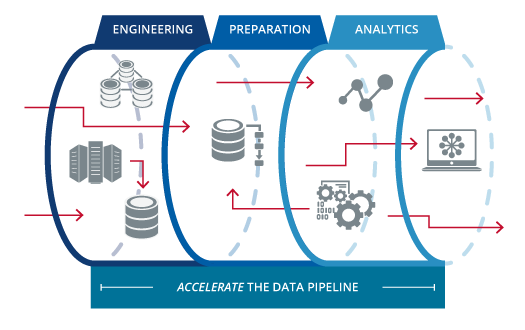

To fix their disjointed data, the data science team had two major problems to solve. First, they needed to build appropriate pipelines to pull their data together into a single location. Second, they needed to build a predictive model to combine all the records for a single person under a single profile. Because customer data was updated daily, the team also needed a record combination algorithm fast enough to run every night.
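As a rough illustration of the second problem, the sketch below assumes the records for one person have already been linked and merges them into a single profile. The field names (`source`, `updated`, `name`, `phone`, `email`) and the "most recent non-empty value wins" rule are hypothetical; the article does not say how the team resolved conflicting values.

```python
from datetime import date


def merge_profile(records):
    """Combine records already linked to one person into a single profile.

    Hypothetical survivorship rule: for each field, keep the most recently
    updated value that is not None or an empty string.
    """
    profile = {}
    # Oldest first, so newer non-empty values overwrite older ones.
    for rec in sorted(records, key=lambda r: r["updated"]):
        for field, value in rec.items():
            if field in ("source", "updated"):
                continue  # bookkeeping fields, not profile data
            if value not in (None, ""):
                profile[field] = value
    # Remember which source systems contributed to this profile.
    profile["sources"] = sorted({rec["source"] for rec in records})
    return profile


# Hypothetical records for the same customer from two acquired systems.
linked = [
    {"source": "crm_a", "updated": date(2019, 3, 1),
     "name": "Jon Smith", "phone": "", "email": "jon@example.com"},
    {"source": "claims_b", "updated": date(2021, 6, 15),
     "name": "John Smith", "phone": "555-0100", "email": None},
]

print(merge_profile(linked))
```

The newer record supplies the name and phone number, while the email survives from the older record because the newer one left it empty.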

What Was the Previous Solution Without Machine Learning?

In the past, the data science team tried to solve these issues by using off-the-shelf software such as Informatica to create pipelines from subsets of the data, which then fed into a simple model that linked the records. This process took 8 hours to complete every night. Although this solution worked for a while, both the data pipelines and the linking model eventually needed an upgrade.

What Was the New Solution With Machine Learning, and What Were the Results?

The team decided to switch to Apache Spark’s DataFrame API to build their new data pipelines across the company’s records. They then wrote an algorithm to create a “blocking key” for each record, intended to serve as a unique identifier that would help the system correctly assign data to an individual. Each blocking key consisted of the first letter of the record’s first name, the first letter of its last name, and the first digit of its zip code. However, after running the system and finding many false positives, the team quickly discovered that the blocking keys weren’t unique enough: many different people’s records could produce the same key.
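A minimal sketch of a blocking key built as described above, written in plain Python rather than with the Spark DataFrame API. The record fields and sample data are hypothetical; the point is to show how two different people can land in the same bucket, which is the false-positive problem the team ran into.

```python
from collections import defaultdict


def blocking_key(first_name: str, last_name: str, zip_code: str) -> str:
    """First letter of first name + first letter of last name + first zip digit."""
    # Normalize case and surrounding whitespace so "ann " and "Ann" agree.
    first = first_name.strip().upper()[:1]
    last = last_name.strip().upper()[:1]
    zip_digit = zip_code.strip()[:1]
    return f"{first}{last}{zip_digit}"


def group_by_key(records):
    """Bucket record ids by blocking key; records sharing a bucket are treated as matches."""
    buckets = defaultdict(list)
    for rec in records:
        key = blocking_key(rec["first"], rec["last"], rec["zip"])
        buckets[key].append(rec["id"])
    return dict(buckets)


# Hypothetical records: 1 and 2 are the same person, but 3 is a different
# person whose name and zip code happen to produce the same key.
records = [
    {"id": 1, "first": "Ann", "last": "Smith", "zip": "02139"},
    {"id": 2, "first": "ann ", "last": "Smith", "zip": "02139"},
    {"id": 3, "first": "Alex", "last": "Stone", "zip": "04101"},
    {"id": 4, "first": "Maria", "last": "Lopez", "zip": "94110"},
]

print(group_by_key(records))  # {'AS0': [1, 2, 3], 'ML9': [4]}
```

With only 26 × 26 × 10 possible keys, collisions like Ann Smith and Alex Stone are unavoidable in a large customer base, so a key this coarse works better for narrowing down candidate pairs than as an identifier.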

The team then applied logistic regression to pairs of similar records (records with phonetically similar names and identical state and zip code) that also had an associated Social Security number. After the model had linked pairs of records, transitive links (if record A matches B and B matches C, then A, B, and C all belong to the same person) were resolved using GraphFrames, a graph library for Apache Spark with a Python API. The team also tested an artificial neural network (ANN) model on the same linking problem. Compared with logistic regression, the ANN showed a marginal gain in recall (the share of true matches it identified) but a loss in precision (the share of its proposed matches that were correct).
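The steps above can be sketched end to end in plain Python. This is not the team's code: it assumes American Soundex as the phonetic algorithm, three made-up pair features, and hand-set coefficients in place of a fitted logistic regression (the article mentions pairs with an associated Social Security number, which could supply labels for fitting). Union-find stands in for the connected-components computation that GraphFrames performs on Spark.

```python
import math
from collections import defaultdict
from itertools import combinations

# American Soundex letter codes; vowels, H, W, and Y get no code.
_CODES = {ch: digit
          for digit, letters in {"1": "BFPV", "2": "CGJKQSXZ", "3": "DT",
                                 "4": "L", "5": "MN", "6": "R"}.items()
          for ch in letters}


def soundex(name: str) -> str:
    """Return the four-character American Soundex code for a name."""
    letters = [ch for ch in name.upper() if ch.isalpha()]
    if not letters:
        return ""
    first, rest = letters[0], letters[1:]
    digits, prev = [], _CODES.get(first, "")
    for ch in rest:
        code = _CODES.get(ch, "")
        if code and code != prev:
            digits.append(code)
        if ch not in "HW":  # H and W do not separate repeated codes
            prev = code
    return (first + "".join(digits) + "000")[:4]


# Hypothetical coefficients standing in for a fitted logistic regression.
BIAS = -3.0
WEIGHTS = {"first_soundex": 2.0, "first_exact": 1.5, "last_exact": 1.5}


def pair_features(a, b):
    """Binary similarity features for a candidate pair (illustrative only)."""
    return {
        "first_soundex": float(soundex(a["first"]) == soundex(b["first"])),
        "first_exact": float(a["first"].lower() == b["first"].lower()),
        "last_exact": float(a["last"].lower() == b["last"].lower()),
    }


def match_probability(a, b):
    """Logistic function applied to a weighted sum of the pair features."""
    z = BIAS + sum(WEIGHTS[k] * v for k, v in pair_features(a, b).items())
    return 1.0 / (1.0 + math.exp(-z))


def candidate_pairs(records):
    """Pair records whose last names sound alike and whose state and zip match."""
    blocks = defaultdict(list)
    for rec in records:
        blocks[(soundex(rec["last"]), rec["state"], rec["zip"])].append(rec)
    for block in blocks.values():
        yield from combinations(block, 2)


def link_records(records, threshold=0.5):
    """Accept pairs scoring above the threshold, then group them transitively."""
    parent = {rec["id"]: rec["id"] for rec in records}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    for a, b in candidate_pairs(records):
        if match_probability(a, b) >= threshold:
            parent[find(a["id"])] = find(b["id"])  # union the two groups

    groups = defaultdict(list)
    for rec in records:
        groups[find(rec["id"])].append(rec["id"])
    return sorted(sorted(ids) for ids in groups.values())


records = [
    {"id": 1, "first": "Jon", "last": "Smith", "state": "MA", "zip": "02139"},
    {"id": 2, "first": "John", "last": "Smith", "state": "MA", "zip": "02139"},
    {"id": 3, "first": "John", "last": "Smyth", "state": "MA", "zip": "02139"},
    {"id": 4, "first": "Mary", "last": "Smith", "state": "MA", "zip": "02139"},
    {"id": 5, "first": "John", "last": "Smith", "state": "NY", "zip": "10001"},
]

print(link_records(records))  # [[1, 2, 3], [4], [5]]
```

Records 1 and 3 score only about 0.27 against each other, but both match record 2, so the transitive step places all three in one profile. Record 5 is never compared with the others because its state and zip code differ.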

The team ultimately found that the ANN model did not link records any more accurately than the logistic regression. However, the team did see benefits elsewhere. With the ANN model, the nightly automation run took 1.5 hours instead of the previous 8 hours, and the code became simpler and easier to maintain. In the end, the company decided to keep the artificial neural network model.
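To make the precision/recall trade-off concrete, here is a small helper that scores predicted match pairs against known matches. The two prediction sets are toy data, not the team's results: the second toy model finds every true pair (higher recall) but also proposes two false ones (lower precision).

```python
def precision_recall(predicted, actual):
    """Pairwise precision and recall for record linkage.

    predicted and actual are sets of frozensets, each holding two record ids.
    """
    true_positives = len(predicted & actual)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(actual) if actual else 0.0
    return precision, recall


def pair_set(*pairs):
    """Turn (id_a, id_b) tuples into order-independent frozensets."""
    return {frozenset(p) for p in pairs}


# Toy ground truth: records 1, 2, 3 are one person; 4 and 5 are another.
actual = pair_set((1, 2), (2, 3), (1, 3), (4, 5))

# Toy model A misses the 4-5 match but proposes nothing wrong.
model_a = pair_set((1, 2), (2, 3), (1, 3))
# Toy model B finds every true match but adds two false ones.
model_b = pair_set((1, 2), (2, 3), (1, 3), (4, 5), (4, 6), (5, 6))

print(precision_recall(model_a, actual))  # (1.0, 0.75)
print(precision_recall(model_b, actual))
```

Model B's recall rises to 1.0 while its precision falls to about 0.67, the same kind of trade-off that leaves neither model clearly better on accuracy alone.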

What Were the Challenges of Automating Tasks With Machine Learning?

So, what were the challenges that the data team faced in this automation task?

- Poor-Quality Training Data. Investing in a higher-quality training dataset would have been appropriate in this case. Instead, the team lost valuable time trying to build a neural network from data they already knew had problems.

- Wasted Time. The team spent too much time searching for a better algorithm, even though every algorithm they had tried so far had performed poorly. It ended up taking nine months to fix the original problem, whereas they might have gotten better results by first improving the quality of the data, for example through outsourced manual validation and tagging.

- Too Much Risk. The Spark DataFrame API was cutting-edge technology at the time. The team might have benefited from using a more established, better-understood machine learning tool for their first automation task.

- Lack of Focus. The team lost focus by trying to solve both of their problems (building data pipelines and combining records from different locations into a single customer profile) at the same time. Had they tackled one problem at a time, they might have arrived at an optimal solution faster.

Hopefully this article gives you a good idea of what a real-life machine learning project looks like and the challenges that can come with it. Worldwide, companies are adopting automation solutions to run their business operations more efficiently. Regardless of the type or size of your company, automation can be a winning strategy to help you and your employees do more with less.
