AI has steadily gained popularity over the years. However, few innovations have caused as much disruption as the introduction of Large Language Models (LLMs). These models have rapidly transformed various sectors, including those with little previous interest in AI. New use cases emerge almost daily. Despite their impressive capabilities, LLMs remain subject to notable limitations.

Even the most advanced LLMs operate within a limited scope. They are confined by the data on which they were trained and lack access to real-time information or external tools needed for complex, dynamic tasks. Once these limitations became apparent, researchers began developing workarounds to enhance LLM capabilities.

Shortly after, Retrieval-Augmented Generation (RAG) systems were introduced. These systems connect an LLM to an external knowledge source, such as a database, allowing it to access more recent information and overcome data constraints. However, the true revolution came with the introduction of AI agents.

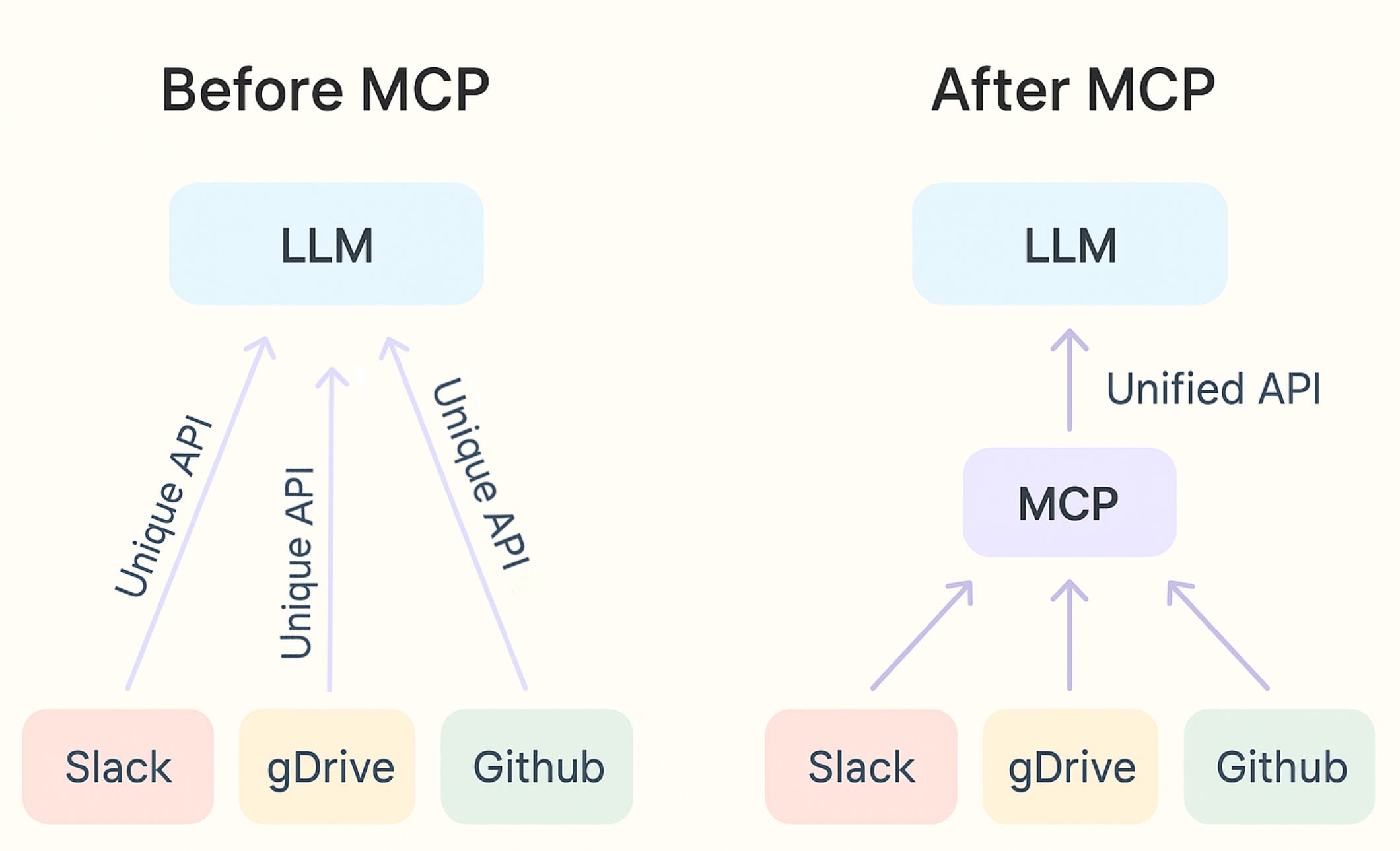

AI agents are complete systems that connect an LLM with a variety of tools, enabling it to solve more complex tasks. For example, when an LLM is connected to a tool like Slack, it can automate message-sending while leveraging its language capabilities. However, this approach also presents limitations. The primary limitation is that different tools have different APIs, so every tool an agent uses needs its own integration code.

A recently introduced solution to this problem is the Model Context Protocol, which will be covered in this article.

What is the Model Context Protocol (MCP) and Why Was It Introduced

The Model Context Protocol (MCP) is an open standard introduced by Anthropic, the creators of the renowned Claude LLMs. It serves as a method for connecting LLMs to external tools in a more standardized manner. You can think of MCP as a standardized “plug-and-play” interface between AI models and external resources.

Before MCP, integrating an AI model with each external tool or data source required a custom integration. Each new connection, whether to a file system, database, API, or other resource, necessitated the development of a unique adapter. This approach was fragile and difficult to scale.

MCP significantly simplifies the entire system by providing a single, unified standard through which AI can access information and perform actions. Its design aims to make AI assistants not only intelligent but also contextually aware and capable of interacting with their environment.

Key Components: How Do the Pieces Fit Together

MCP is built on a client-host-server model. This model standardizes communication while maintaining security. The important components of MCP are:

- Host Process

- Client Instances

- MCP Servers

The host process is the center of the MCP setup. Essentially, it is the core application where the AI capabilities reside. This could be an AI dashboard, a smart email client, a CRM system, etc.

It isn’t the AI model itself, but rather the platform that manages, coordinates, and secures the AI’s interactions with the outside world via MCP. In layman’s terms, it is the AI application that you see.

The host process manages multiple AI clients. It serves as the primary security layer, gathers and prepares relevant information from various sources, and compiles it for the AI model. It also orchestrates the overall workflow, deciding which AI component should interact with which external system's MCP Server based on the current task or user command.
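The host's coordinating role can be illustrated with a minimal sketch. Everything here is hypothetical and not part of MCP itself: the `Host` and `ClientInstance` classes, the server names, and the idea of an `allowed_servers` approval list are assumptions chosen only to show routing and a basic security check in one place.

```python
from dataclasses import dataclass, field


@dataclass
class ClientInstance:
    """One dedicated connection from the host to a single MCP server (hypothetical)."""
    server_name: str
    tool_names: set[str]


@dataclass
class Host:
    """Hypothetical host that owns the clients and decides where a call goes."""
    clients: list[ClientInstance] = field(default_factory=list)
    allowed_servers: set[str] = field(default_factory=set)

    def route(self, tool_name: str) -> ClientInstance:
        # Find the client whose server advertised this tool.
        for client in self.clients:
            if tool_name in client.tool_names:
                # The host acts as the security layer: refuse unapproved servers.
                if client.server_name not in self.allowed_servers:
                    raise PermissionError(f"{client.server_name} is not approved")
                return client
        raise LookupError(f"no connected server offers {tool_name!r}")


host = Host(
    clients=[
        ClientInstance("slack", {"send_message"}),
        ClientInstance("tracker", {"create_task", "update_task"}),
    ],
    allowed_servers={"tracker"},
)
print(host.route("create_task").server_name)  # prints "tracker"
```

A real host would also gather context for the model and manage user consent; the sketch only covers the "which client talks to which server" decision.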

Living within the host process are the client instances. These are lightweight connectors that allow the AI to “speak” the MCP standard and interact with external systems. Each instance represents a dedicated connection or session, often associated with a particular AI task or a user's ongoing interaction. The primary jobs of client instances are:

- Capability Negotiation – When a client connects to an MCP server, an initial exchange occurs. This helps both parties understand each other's capabilities and determine the version of the MCP protocol for communication.

- Message Orchestration – The client manages communication flow between the AI in the host and the MCP server. It formats requests according to the MCP standard and processes incoming responses.

- Maintaining Boundaries – The client enforces security boundaries within the Host application. It ensures that data and access for one AI task or user session remain isolated from others.
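Capability negotiation can be sketched as follows. MCP messages are JSON-RPC 2.0 objects, and the opening request uses the `initialize` method. The version strings, the client name, and both helper functions are illustrative assumptions rather than a reference implementation.

```python
import json

# Protocol versions this hypothetical client supports, newest first (example values).
CLIENT_VERSIONS = ["2025-03-26", "2024-11-05"]


def build_initialize_request(request_id: int) -> dict:
    """Build the first message a client sends after connecting."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": CLIENT_VERSIONS[0],  # propose the newest version
            "capabilities": {},                      # optional client features go here
            "clientInfo": {"name": "example-host", "version": "0.1.0"},
        },
    }


def accept_server_version(response: dict) -> str:
    """Return the agreed version, or fail if the server picked one we cannot speak."""
    version = response["result"]["protocolVersion"]
    if version not in CLIENT_VERSIONS:
        raise RuntimeError(f"unsupported protocol version: {version}")
    return version


request = build_initialize_request(1)
print(json.dumps(request, indent=2))

# Simulated server reply that falls back to an older version the client also supports.
reply = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "protocolVersion": "2024-11-05",
        "capabilities": {"tools": {}},
        "serverInfo": {"name": "example-server", "version": "0.1.0"},
    },
}
print(accept_server_version(reply))
```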

MCP servers act as links that connect the AI application, running within the host through client instances, to the various external data sources, tools, and services that businesses use daily. These servers are lightweight and designed specifically to implement the MCP standard for a particular external system. They receive standardized MCP requests from the AI client and convert them into native API calls, database queries, or other specific commands understood by the external system. Once the external system processes the request, the result is translated back into the standard MCP response format that the AI client can understand.
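The translation step described above might look like the sketch below, where an in-memory SQLite database stands in for the external system. The `count_tasks` tool, the `tasks` table, and the `handle_request` function are hypothetical; only the JSON-RPC envelope and the `tools/call` method name follow the protocol.

```python
import sqlite3


def make_demo_database() -> sqlite3.Connection:
    """Create a stand-in 'external system' with a few example rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE tasks (title TEXT, status TEXT)")
    conn.executemany(
        "INSERT INTO tasks VALUES (?, ?)",
        [("Write spec", "open"), ("Ship release", "open"), ("Fix login", "done")],
    )
    return conn


def error_response(request_id, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle_request(conn: sqlite3.Connection, request: dict) -> dict:
    """Translate a standardized request into a native SQL query and back."""
    if request.get("method") != "tools/call":
        return error_response(request.get("id"), -32601, "Method not found")
    params = request.get("params", {})
    if params.get("name") != "count_tasks":
        return error_response(request.get("id"), -32602, "Unknown tool")

    # Native call: a parameterized query against the external system.
    status = params.get("arguments", {}).get("status", "open")
    (count,) = conn.execute(
        "SELECT COUNT(*) FROM tasks WHERE status = ?", (status,)
    ).fetchone()

    # Package the native result in the standardized response shape.
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": str(count)}], "isError": False},
    }


conn = make_demo_database()
call = {
    "jsonrpc": "2.0",
    "id": 7,
    "method": "tools/call",
    "params": {"name": "count_tasks", "arguments": {"status": "open"}},
}
print(handle_request(conn, call)["result"]["content"][0]["text"])  # prints "2"
```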

Servers can offer the following:

- Resources

- Tools

- Prompt Templates

Resources represent any type of data that an MCP server can provide to the AI model. These are primarily read-only, meaning the AI can read or retrieve information from them but cannot modify the source data itself. Examples include customer contact details, project status updates, specific records, reports, or any other type of document.
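As a rough illustration of the read-only idea, the sketch below exposes records through a view that cannot be modified. The `crm://` URI scheme, the record contents, and the `read_resource` helper are invented for the example; the returned dictionary loosely mirrors the shape of a resource read result.

```python
from types import MappingProxyType

# Source data owned by the server (hypothetical records).
_RECORDS = {
    "crm://customers/42": "Ada Lovelace, ada@example.com",
    "crm://projects/7/status": "On track, 3 open tasks",
}

# Read-only view: callers can look records up but cannot change them.
RESOURCES = MappingProxyType(_RECORDS)


def read_resource(uri: str) -> dict:
    """Return the contents of one resource, identified by its URI."""
    if uri not in RESOURCES:
        raise KeyError(f"unknown resource: {uri}")
    return {"contents": [{"uri": uri, "mimeType": "text/plain", "text": RESOURCES[uri]}]}


print(read_resource("crm://customers/42")["contents"][0]["text"])
```

Attempting `RESOURCES["crm://customers/42"] = "..."` raises a `TypeError`, which is the point of the read-only view.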

Tools are, simply put, callable functions with side effects. They enable the AI to perform actions using external systems. These tools are specific operations that an MCP server exposes. The AI model can choose to use them based on its understanding of the task and the available options.

Tools allow the AI to take actions according to the context and the information it has. This enables the AI to complete tasks successfully. Examples include sending an email or message, creating or updating tasks in a project management system, generating reports from a database, or even triggering entire pre-built workflows.

In short, tools elevate agents from simply providing information to being active participants in business processes.
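A tool can be pictured as a description plus a function with a side effect. In the sketch below, the description uses a name, a human-readable summary, and a JSON Schema for the inputs, which is what lets the model decide when and how to call it. The `send_message` tool, the `OUTBOX` list standing in for a chat service, and the hand-rolled argument check are all assumptions made for illustration.

```python
# What the server advertises to the client (hypothetical tool).
SEND_MESSAGE_TOOL = {
    "name": "send_message",
    "description": "Send a chat message to a channel.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "channel": {"type": "string"},
            "text": {"type": "string"},
        },
        "required": ["channel", "text"],
    },
}

# Stand-in for the external chat service; appending here is the side effect.
OUTBOX: list[tuple[str, str]] = []


def call_send_message(arguments: dict) -> dict:
    """Run the tool and report success or failure in a uniform result shape."""
    required = SEND_MESSAGE_TOOL["inputSchema"]["required"]
    missing = [key for key in required if key not in arguments]
    if missing:
        text = f"missing arguments: {', '.join(missing)}"
        return {"content": [{"type": "text", "text": text}], "isError": True}
    OUTBOX.append((arguments["channel"], arguments["text"]))
    return {"content": [{"type": "text", "text": "message sent"}], "isError": False}


print(call_send_message({"channel": "#general", "text": "Build finished"}))
print(call_send_message({"channel": "#general"}))
```

Returning the failure as a result with `isError` set, rather than raising, lets the model see what went wrong and try again with corrected arguments.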

Ultimately, the AI models that serve as the brain of our AI agent systems still need us to provide a prompt. While the AI could, in theory, decide on its own to use a particular resource or tool, we typically get better results if we guide it. By telling the AI which resources to reference and which tools to use, we help it solve problems more effectively.

To streamline the process, servers can provide prompt templates for common multi-step tasks. These templates involve the agent using a particular set of resources and tools. They act as shortcuts for curated interaction patterns provided by the external system's MCP Server. This makes it easier for both users and AI to leverage available capabilities.
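A prompt template can be sketched as a named, parameterized message that the server fills in on request. The `weekly_report` prompt, its wording, and the internal `template` field are hypothetical; the `arguments` list and the returned `messages` structure loosely follow how prompts are described and delivered.

```python
from string import Template

# A hypothetical template the server offers for a common multi-step task.
WEEKLY_REPORT_PROMPT = {
    "name": "weekly_report",
    "description": "Summarize a project's progress for the past week.",
    "arguments": [
        {"name": "project", "description": "Project name", "required": True},
    ],
    # Internal to this sketch: the text that gets filled in.
    "template": Template(
        "Read the status resource for $project, list its open tasks, "
        "and draft a weekly summary."
    ),
}


def get_prompt(prompt: dict, arguments: dict) -> dict:
    """Fill in the template and return ready-to-use messages for the model."""
    missing = [
        arg["name"]
        for arg in prompt["arguments"]
        if arg.get("required") and arg["name"] not in arguments
    ]
    if missing:
        raise ValueError(f"missing prompt arguments: {missing}")
    text = prompt["template"].substitute(arguments)
    return {
        "description": prompt["description"],
        "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
    }


result = get_prompt(WEEKLY_REPORT_PROMPT, {"project": "Apollo"})
print(result["messages"][0]["content"]["text"])
```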

How Does MCP Work in Action Through a Typical Workflow

For some, the concepts explained so far might seem abstract. MCP becomes much easier to understand through a typical workflow example. This standardized workflow is a key aspect of MCP's power: it enables consistent interaction regardless of the specific external system involved.

First, the host application launches and establishes a connection with the corresponding MCP server. For example, an enterprise assistant application might connect to an MCP server designed to interface with a project management tool.

Next, the client and the server perform a brief "handshake" to confirm that they support a compatible version of the MCP protocol and to exchange their capabilities. As part of this initial exchange, the client also obtains from the server a list of available resources and tools, each with a detailed description. This step allows the AI to understand what actions it can perform within the connected system.
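One way to picture how the advertised capabilities reach the model is a helper that turns a tool listing into a short text summary the host can place in the model's context. The listing below is a made-up example of what a server might return, and `describe_tools` is a hypothetical helper, not part of any SDK.

```python
def describe_tools(list_result: dict) -> str:
    """Render a tool listing as one line per tool for the model's context."""
    lines = []
    for tool in list_result["tools"]:
        required = tool.get("inputSchema", {}).get("required", [])
        signature = f"{tool['name']}({', '.join(required)})"
        lines.append(f"- {signature}: {tool.get('description', '')}")
    return "\n".join(lines)


# Example listing a project management server might return (illustrative only).
listing = {
    "tools": [
        {
            "name": "create_task",
            "description": "Create a task in a project.",
            "inputSchema": {
                "type": "object",
                "properties": {"project": {"type": "string"}, "title": {"type": "string"}},
                "required": ["project", "title"],
            },
        },
        {
            "name": "list_projects",
            "description": "List all projects.",
            "inputSchema": {"type": "object", "properties": {}},
        },
    ]
}
print(describe_tools(listing))
```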

Afterwards, the user inputs a prompt to interact with the AI within the host application. This can involve typing into a chat interface, clicking a button, or taking any similar action. Alternatively, an automated process within the Host application might determine that AI interaction is required.

The AI model, accessed through the host application and now aware of the available capabilities through the earlier handshake, analyzes the user's request or task. It understands the intent and, based on this, determines which specific capabilities to invoke from the connected MCP server(s). The client then formats that choice as a standardized MCP request and sends it to the appropriate server.

The MCP server receives the standardized request. It then performs the required action with the external system it represents. This could involve querying a database, calling a third-party API, accessing a file, or executing a specific function within the external application. Once the action is complete, the server packages the result into a standardized MCP response format and returns it to the AI client.

The AI client forwards the response it received back to the host. The host makes this new information available to the AI model. The AI then integrates this new data, or the outcome of the action, into its current context. It can now use this real-time information to formulate a complete, accurate, and contextually relevant final response to the user. It can also decide on the next logical step in a multi-part task.
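The loop of calling a tool, feeding the result back, and letting the model decide the next step can be sketched with stand-ins for both the model and the client. `fake_model`, `fake_client_call`, the `count_open_tasks` tool, and the message format with a `tool` role are all assumptions made so the example runs without any external service.

```python
def fake_model(messages: list[dict]) -> dict:
    """Stand-in for an LLM: asks for one tool call, then answers using its result."""
    tool_messages = [m for m in messages if m["role"] == "tool"]
    if not tool_messages:
        return {"tool_call": {"name": "count_open_tasks", "arguments": {}}}
    return {"answer": f"There are {tool_messages[-1]['content']} open tasks."}


def fake_client_call(name: str, arguments: dict) -> dict:
    """Stand-in for the client sending a request to an MCP server."""
    return {"content": [{"type": "text", "text": "3"}], "isError": False}


def run_turn(user_prompt: str, max_steps: int = 5) -> str:
    """Host-side loop: call tools until the model produces a final answer."""
    messages = [{"role": "user", "content": user_prompt}]
    for _ in range(max_steps):
        reply = fake_model(messages)
        if "answer" in reply:
            return reply["answer"]
        call = reply["tool_call"]
        result = fake_client_call(call["name"], call["arguments"])
        # Integrate the tool output into the context the model sees next.
        text = "".join(part["text"] for part in result["content"] if part["type"] == "text")
        messages.append({"role": "tool", "content": text})
    raise RuntimeError("model did not finish within the step limit")


print(run_turn("How many tasks are still open?"))  # prints "There are 3 open tasks."
```

The step limit is a design choice for the sketch: it keeps a model that never stops requesting tools from looping forever.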

Throughout this process, the communication channel between the Client and Server remains active, allowing for continued interaction. This interaction includes sending progress updates, reporting errors, and handling other related tasks. In more advanced scenarios, the server may be configured to ask the AI a clarifying question or request the AI to perform a sub-task based on the data or situation it encounters.
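Because the channel stays open, a client has to tell several kinds of incoming messages apart. In JSON-RPC 2.0, which MCP builds on, a message with a `method` and an `id` is a request, a message with a `method` but no `id` is a notification, and a message with `result` or `error` is a response. The `classify` helper and the sample payloads below are illustrative assumptions.

```python
def classify(message: dict) -> str:
    """Sort an incoming JSON-RPC message into one of four kinds."""
    if "method" in message:
        # Requests expect a reply; notifications (no id) do not.
        return "request" if "id" in message else "notification"
    if "error" in message:
        return "error"
    if "result" in message:
        return "result"
    raise ValueError("not a valid JSON-RPC message")


incoming = [
    # Progress update from the server while a long action is running.
    {"jsonrpc": "2.0", "method": "notifications/progress",
     "params": {"progressToken": "job-1", "progress": 50, "total": 100}},
    # Server asking the host's model to perform a sub-task.
    {"jsonrpc": "2.0", "id": 12, "method": "sampling/createMessage", "params": {}},
    # Failure reported for an earlier request.
    {"jsonrpc": "2.0", "id": 3, "error": {"code": -32602, "message": "Invalid params"}},
    # Normal successful response.
    {"jsonrpc": "2.0", "id": 4, "result": {"content": []}},
]
for message in incoming:
    print(classify(message))
```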

The Model Context Protocol marks a significant advancement in unlocking the full potential of AI agents. It provides a crucial, standardized layer that enables seamless integration with real-world data and tools. Developers are embracing it eagerly because it solves fundamental integration challenges. By offering one standard instead of countless custom adapters, being model-agnostic, and allowing for swappable components, MCP enables tools to be added or changed without disrupting the core AI model.

MCP is already being adopted in real-world applications. Giants like Anthropic are integrating it into products such as Claude Desktop, providing ready-made connectors for major services like Google Drive, Slack, and various databases. OpenAI has added MCP support to its experimental Agents SDK, enabling GPT-4 agents to leverage MCP servers. Leading coding tools and numerous companies are employing MCP to connect AI assistants to complex internal systems and codebases. Meanwhile, a vibrant community is rapidly building hundreds of servers for diverse applications, ranging from payment systems like Stripe to IoT devices.

Given the points discussed earlier, it is clear that MCP is poised to be the key protocol for building the next generation of intelligent applications powered by AI models. In the future, embracing MCP will be crucial for any organization aiming to create advanced AI solutions capable of effectively interacting with the digital world.