
Last year COVID-19 took the world by surprise, and together we all learned how a pandemic can influence businesses and everyday life. However, as with most problems, we also learned how to adapt.

The most common adaptation during COVID-19 was the rise of working from home. Although the concept of working remotely was around before the pandemic, working from home was mostly practiced by programmers or other professionals in similar roles. The pandemic showed us that a lot of work can be done remotely. Living in one country and working in another is now relatively common and, with time, the term “work from home” may even be replaced with a new idea—“work from anywhere.”

A change in workplace location was not the only adaptation caused by the pandemic. In 2020, many breakthroughs in scientific fields went unnoticed while companies struggled to stay relevant in the wake of a shifting work culture. Many of these breakthroughs were accomplished using another game-changing workplace innovation called artificial intelligence, or AI.

In a world struck by a pandemic, nobody can blame those outside the scientific community for not being aware of (or excited about) the many changes AI brought about last year. Even so, many companies have adopted some form of machine learning and/or deep learning in their processes, hoping to make their businesses more competitive in the global market.


Talking about all of the accomplishments and breakthroughs in the field of AI in 2020 would require many articles, even if I focused on only the most important ones. So in this article, I'll skip commonly talked-about AI topics.

While it might seem blasphemous to those in the AI community not to mention models such as the Generative Pre-trained Transformer 3 (GPT-3), these topics have already received plenty of attention. (Disclaimer: generating human-like text is no small feat! But I'd like to shift the spotlight away from Natural Language Processing (NLP) models, at least for a bit.)

In this article, let's set aside AI that imitates something you can already do, and focus instead on AI that achieves what you previously thought was impossible.

What is the Artificial Intelligence Paradox of Solving Paradoxes?

A paradox can be described as a logically self-contradictory statement, and most paradoxes are best illustrated with a thought experiment. Some of the most intriguing paradoxes have been popularized by the media. Maybe you've heard of the time travel paradoxes that consider the potential cause and effect of traveling back in time, such as the Bootstrap Paradox (getting stuck in an infinite loop of cause and effect) and the Grandfather Paradox (if you go back in time and kill your grandfather, can you still exist in the present day?). They have been explored in many books, movies, and television series.

Some paradoxes have even become common knowledge because of how often they're referenced, not only in the media but also in schools. The Ship of Theseus (if a ship has all of its parts replaced, is it still the same ship at the end?) and Hilbert's Infinite Hotel (can a fully occupied hotel with an infinite number of rooms still accommodate new guests?) are just two more examples. Others, such as Maxwell's Demon (a thought experiment exploring the second law of thermodynamics), are mostly known and discussed by people working in particular scientific fields.

So what do paradoxes have to do with the role of artificial intelligence?

Hidden among the problems brought about by the pandemic, several scientific breakthroughs happened in 2020. Many of them had one thing in common: in one way or another, AI found solutions to problems that were previously thought to be unsolvable, or even paradoxical.

A paradox by definition doesn't seem to have a viable solution, so what happens when a solution is actually found? Does the paradox remain a paradox? Last year, AlphaFold, an AI system developed by DeepMind (part of Google's parent company, Alphabet), led many to ask that same question when it found a solution to a fifty-year-old paradox. More specifically, in 2020 AlphaFold solved what is known as Levinthal's Paradox.


Levinthal's Paradox

Levinthal's Paradox is a famous thought experiment proposed in 1969 by Cyrus Levinthal. It's closely tied to the “protein folding problem,” because the seemingly unsolvable problem at the heart of Levinthal's Paradox is the perceived impossibility of accurately predicting the structure of a protein.

For background, the unique 3D structure of a particular protein heavily influences how that protein behaves, and an unfolded polypeptide chain has a whopping estimated 10^300 possible conformations. To put that number into perspective: if a protein were to sequentially sample all possible conformations until it found the “right” one, it would need more time than the age of the universe to reach its correct state. Yet small proteins fold spontaneously in a matter of milliseconds, or even microseconds.
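The arithmetic behind that comparison is easy to check. The Python sketch below is a back-of-the-envelope illustration of our own: the 10^300 figure comes from the text, while the sampling speed of one conformation every 10^-13 seconds is an assumption in the spirit of typical textbook versions of this estimate, and the age of the universe is taken as roughly 13.8 billion years. It works with base-10 exponents so the huge numbers stay readable.

```python
import math

# From the text: an estimated 10^300 possible conformations.
LOG10_CONFORMATIONS = 300

# Assumption: one conformation can be sampled every 10^-13 seconds.
LOG10_SECONDS_PER_SAMPLE = -13

# Age of the universe: roughly 13.8 billion years, converted to seconds.
SECONDS_PER_YEAR = 365.25 * 24 * 3600
AGE_OF_UNIVERSE_S = 13.8e9 * SECONDS_PER_YEAR


def log10_search_time(log10_conformations: int, log10_seconds_per_sample: int) -> int:
    """Return log10 of the seconds needed to try every conformation once, in sequence."""
    # Multiplying two powers of ten is the same as adding their exponents.
    return log10_conformations + log10_seconds_per_sample


log10_t = log10_search_time(LOG10_CONFORMATIONS, LOG10_SECONDS_PER_SAMPLE)
log10_age = math.log10(AGE_OF_UNIVERSE_S)

print(f"Exhaustive search: about 10^{log10_t} seconds")
print(f"Age of the universe: about 10^{log10_age:.1f} seconds")
print(f"The search would take about 10^{log10_t - log10_age:.0f} times longer")
```

Even if the assumed sampling speed were off by many orders of magnitude, the exhaustive search would still dwarf the age of the universe, which is the heart of the paradox.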

Therefore, to Levinthal, predicting what the structure of a protein will look like seemed like an impossible challenge:

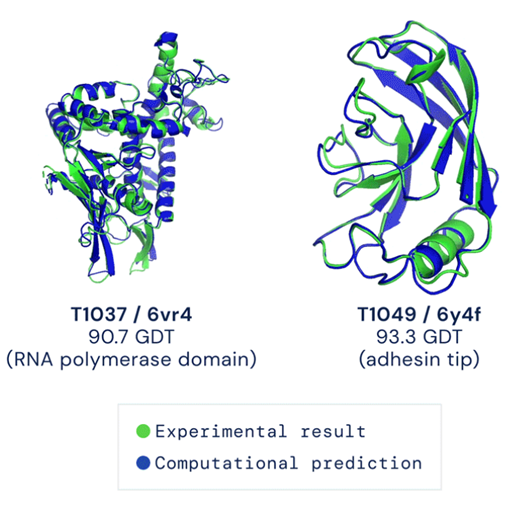

Image Source: DeepMind, www.deepmind.com

Levinthal's “impossible challenge” was solved last year by AlphaFold. More specifically, CASP (short for Critical Assessment of Techniques for Protein Structure Prediction), a long-running community experiment that benchmarks protein structure prediction, uses a metric called the global distance test (GDT) to describe how close a prediction is to the experimentally determined structure of a folded protein. GDT scores range from 0 to 100, and a score of around 90 is informally considered competitive with experimental methods, which made it the benchmark for a valid solution to the protein folding problem. In the 2020 CASP14 assessment, AlphaFold 2, the second iteration of AlphaFold, achieved a median score of 92.4 GDT, suggesting that Levinthal's paradox might not be a paradox after all.
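To give a feel for what a GDT score measures, here is a simplified Python sketch. It follows the common GDT_TS variant, which averages the percentage of residues whose predicted position lies within 1, 2, 4, and 8 ångströms of the reference position. As a simplifying assumption, the two structures are taken to be already superimposed, with residue i in one matching residue i in the other; the real GDT procedure searches over many superpositions to maximize each percentage, which this sketch skips. The function name and coordinates are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]

# Distance cutoffs (in angstroms) used by the GDT_TS variant of the score.
GDT_TS_CUTOFFS = (1.0, 2.0, 4.0, 8.0)


def simplified_gdt_ts(predicted: Sequence[Point], reference: Sequence[Point]) -> float:
    """Return a simplified GDT_TS score between 0 and 100.

    Assumes both structures are already superimposed and that residue i of
    `predicted` corresponds to residue i of `reference`. The real GDT also
    searches over superpositions, which is skipped here.
    """
    if not reference or len(predicted) != len(reference):
        raise ValueError("structures must be non-empty and have the same length")
    distances = [math.dist(p, r) for p, r in zip(predicted, reference)]
    # Fraction of residues that land within each cutoff of their true position.
    fractions = [
        sum(1 for d in distances if d <= cutoff) / len(distances)
        for cutoff in GDT_TS_CUTOFFS
    ]
    return 100.0 * sum(fractions) / len(fractions)


# Hypothetical coordinates: a straight four-residue chain and a prediction
# whose residues are displaced by 0.5, 1.5, 3.0, and 10.0 angstroms.
reference = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0), (11.4, 0.0, 0.0)]
predicted = [(0.0, 0.5, 0.0), (3.8, 1.5, 0.0), (7.6, 3.0, 0.0), (11.4, 10.0, 0.0)]

print(simplified_gdt_ts(predicted, reference))  # 56.25
print(simplified_gdt_ts(reference, reference))  # 100.0
```

In the example, the fractions of residues within the four cutoffs are 1/4, 2/4, 3/4, and 3/4, which average to 56.25. A perfect prediction scores 100, which puts AlphaFold 2's median of 92.4 into perspective.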

Schrödinger’s Cat

If protein folding isn't your “thing,” another paradox where AI's progress has piqued the interest of many is Schrödinger’s Cat.

Schrödinger’s Cat is one of the most famous paradoxes ever conceived. In fact, most people have heard of it, even if they aren't well-versed in quantum mechanics. The Schrödinger’s Cat thought experiment illustrates a recurring puzzle in quantum mechanics: what it would mean for a macroscopic object to exist simultaneously in more than one completely distinct state.

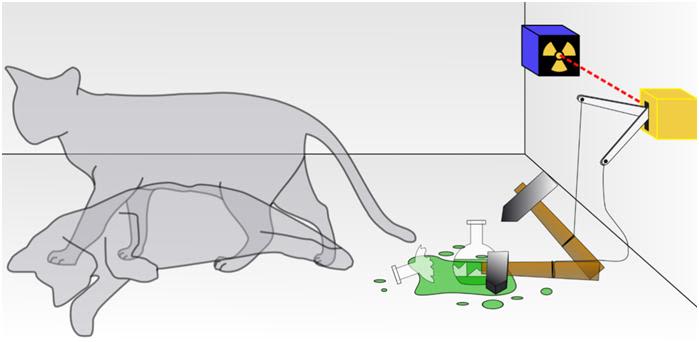

As often happens with paradoxes, a corresponding thought experiment was designed to make the problem easier to understand. To paraphrase, the thought experiment is as follows:

Imagine constructing a steel opaque box and putting a cat inside it, along with a device. The cat must not be able to tamper with the device. The device should contain a Geiger counter (an instrument for detecting and measuring radiation), a very small amount of a radioactive substance, a hammer, and a flask of hydrocyanic acid. The amount of radioactive substance inside the device should be so small that there is an equal chance of an atom decaying or not decaying. If an atom decays, the hammer breaks the vial of hydrocyanic acid and poisons the cat, killing it. To conduct the experiment is to leave the system alone for an hour. During this period an atom will either decay—and the cat will die—or an atom will not decay and the cat will live.

Image Source: Schrödinger’s Cat Experiment, https://en.wikipedia.org/wiki/Schr%C3%B6dinger%27s_cat
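The 50/50 setup is what makes the cat a stand-in for quantum superposition. The Python sketch below is a toy illustration of our own, not a physics simulation and not part of the research discussed in this article: it stores the cat's state as two amplitudes, one for “alive” and one for “dead,” and uses the Born rule (an outcome's probability is the squared magnitude of its amplitude) to simulate opening the box. The equal amplitudes of 1/√2 are an assumption chosen to match the thought experiment's even odds.

```python
import math
import random
from typing import Dict, Tuple

# Toy model (assumption): the cat's state is an equal superposition,
# (|alive> + |dead>) / sqrt(2), stored as one amplitude per outcome.
CAT_STATE: Dict[str, float] = {"alive": 1 / math.sqrt(2), "dead": 1 / math.sqrt(2)}


def born_probabilities(amplitudes: Dict[str, float]) -> Dict[str, float]:
    """Born rule: each outcome's probability is the squared magnitude of its amplitude."""
    return {outcome: abs(a) ** 2 for outcome, a in amplitudes.items()}


def open_box(amplitudes: Dict[str, float], rng: random.Random) -> Tuple[str, Dict[str, float]]:
    """Simulate one observation: sample an outcome, then 'collapse' the state onto it."""
    probs = born_probabilities(amplitudes)
    outcome = rng.choices(list(probs), weights=list(probs.values()))[0]
    collapsed = {o: (1.0 if o == outcome else 0.0) for o in amplitudes}
    return outcome, collapsed


rng = random.Random(42)
print(born_probabilities(CAT_STATE))  # roughly 0.5 each, before anyone looks
outcome, after = open_box(CAT_STATE, rng)
print(outcome, after)  # one definite answer once the box is opened
alive_count = sum(open_box(CAT_STATE, rng)[0] == "alive" for _ in range(10_000))
print(alive_count / 10_000)  # close to 0.5 over many repeated experiments
```

Before the box is opened, the model holds both outcomes at once; each observation yields exactly one, and only the statistics over many repeated experiments reveal the even odds.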

The idea behind the Schrödinger’s Cat experiment is that you, as an observer who can't see inside the box, have no way of knowing whether the cat is dead or alive. Therefore, until the box is opened, the cat can be thought of as both alive and dead at the same time. The quantum behavior behind this puzzle is described by Schrödinger's equation, and solving that equation accurately for real molecules has been the goal of many researchers for decades. As was the case with Levinthal's Paradox, it seems that in 2020 AI finally managed to make a breakthrough.

More specifically, scientists at Freie Universität Berlin developed a deep neural network that can solve the Schrödinger equation for molecules with an unprecedented combination of accuracy and computational efficiency. Until then, the main way of approaching the equation for anything but the simplest systems had been to rely on approximations that trade accuracy for speed. By building the Pauli exclusion principle directly into their neural network, the researchers not only solved the equation with high accuracy, but also managed to do so at a reasonable computational cost. This design gave the network its name, “PauliNet,” and once again showed AI's ability to challenge our definition of the word “paradox.”
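What does it mean to build the Pauli exclusion principle into a model? Electrons are fermions, so a valid many-electron wavefunction must change sign whenever two electrons are swapped, which in turn forces it to vanish when two electrons (of the same spin) occupy the same position. A standard way to guarantee this, and a building block PauliNet also uses, is the Slater determinant. The Python sketch below is a toy illustration of our own, not PauliNet's code: the three one-dimensional orbitals are hypothetical, spin is ignored, and the determinant is computed by simple cofactor expansion.

```python
import math
from typing import Callable, List, Sequence

# Hypothetical 1D "orbitals" chosen only for illustration (spin is ignored).
ORBITALS: List[Callable[[float], float]] = [
    lambda x: math.exp(-x * x),
    lambda x: x * math.exp(-x * x),
    lambda x: (2 * x * x - 1) * math.exp(-x * x),
]


def determinant(matrix: List[List[float]]) -> float:
    """Determinant by cofactor expansion along the first row (fine for tiny matrices)."""
    if len(matrix) == 1:
        return matrix[0][0]
    total = 0.0
    for col, value in enumerate(matrix[0]):
        minor = [row[:col] + row[col + 1:] for row in matrix[1:]]
        sign = -1.0 if col % 2 else 1.0
        total += sign * value * determinant(minor)
    return total


def slater_wavefunction(positions: Sequence[float]) -> float:
    """Psi = det[phi_j(x_i)]: row i holds electron i, column j holds orbital j."""
    matrix = [[ORBITALS[j](x) for j in range(len(positions))] for x in positions]
    return determinant(matrix)


psi = slater_wavefunction([0.1, -0.4, 0.7])
psi_swapped = slater_wavefunction([-0.4, 0.1, 0.7])  # electrons 1 and 2 exchanged
psi_same = slater_wavefunction([0.3, 0.3, 0.7])      # two electrons at one point

print(psi, psi_swapped)  # same magnitude, opposite sign
print(psi_same)          # zero, up to floating-point rounding
```

Swapping two rows of a matrix flips the sign of its determinant, and two identical rows make it zero, so antisymmetry and the exclusion principle follow from the structure itself. Roughly speaking, a network like PauliNet keeps this guarantee and learns the parts of the wavefunction that such a simple construction misses.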

What Are the Limitations of Modern AI?

Even though I've so far sung the praises of deep learning systems that solve problems humans cannot, AI is not all-knowing, and language is not the only area where AI lags behind human capabilities. In fact, AI has paradoxes of its own.

One of the most famous is Moravec's Paradox, which demonstrates the counterintuitive way AI models acquire knowledge. Moravec himself says that "it is comparatively easy to make computers exhibit adult-level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility."

Moravec attributes this difference in skills between humans and AI to evolution. The oldest human skills, such as motor and perceptual skills, have been refined over millions of years and feel effortless to us today, which makes them difficult to reverse-engineer. On the other hand, skills such as advanced mathematics, engineering, logic, and scientific reasoning are relatively recent in evolutionary terms, so they are still hard for us. In other words, they aren't what our brains and bodies were primarily shaped to do.

Moravec isn't the only one to discuss the limits of modern AI, a topic sometimes described as “learnability limbo.” While solving Levinthal's Paradox or Schrödinger's equation may lead you to believe there are no limits to what AI can learn, that conclusion doesn't really hold. A logical puzzle that traces back to Kurt Gödel better describes the real situation.

Kurt Gödel did not have AI in mind when he worked on the continuum hypothesis, and neither did Paul Cohen when he complemented Gödel's work in the second half of the twentieth century. Together, their results showed that the continuum hypothesis can be neither proved nor disproved using the standard axioms of mathematics. This matters for AI because researchers have since shown that, for at least one learning problem, whether it can be learned depends on the continuum hypothesis, so the question cannot be settled either way. Since computer algorithms use principles of mathematics and statistics to “learn,” the same limitations that apply to standard mathematics and statistics also apply to, and limit, AI.

The limitations of AI have been a topic of debate for as long as AI has existed, but the truth is that nobody knows exactly what these limitations are. With the examples explored in this article, the potential of AI can seem both infinite and limited at once, depending on where it's used.


Some believe the paradoxical intelligence of AI is best understood through a test that Alan Turing proposed in 1950. Turing is often called the father of artificial intelligence, and his test proposed a way to judge whether a machine can be as intelligent as a human.

The Turing Test and Eugene Goostman: How AI Can Be as Intelligent and Limited as Humans

The Turing test itself is very simple: a human evaluator is isolated in a room with just a computer. Using text, the evaluator then communicates with both a machine and another human, without being told which is which. In a commonly used version of the pass criterion, based on a prediction Turing made in 1950, the machine passes if it fools the evaluators into thinking they're speaking with a human more than 30% of the time. While many people still doubt that AI can reach the level of intelligence needed to fool a human, some researchers believe that AI has already passed the Turing test.

An AI chatbot named Eugene Goostman took the Turing test in 2014 and successfully convinced 33% of the judges at the Royal Society in London that it was human. However, the conversations that Eugene Goostman held with the judges were only five minutes long, and because they were so short, some researchers dispute the result.
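The pass criterion boils down to a simple proportion. The Python sketch below is a hypothetical helper of our own, not part of any official test protocol: it checks whether the share of fooled judges exceeds the 30% threshold, and the judge counts in the example are made up for illustration.

```python
def passes_turing_threshold(judges_fooled: int, total_judges: int, threshold: float = 0.30) -> bool:
    """Return True if the share of judges fooled by the machine exceeds the threshold."""
    if total_judges <= 0:
        raise ValueError("total_judges must be positive")
    if not 0 <= judges_fooled <= total_judges:
        raise ValueError("judges_fooled must be between 0 and total_judges")
    return judges_fooled / total_judges > threshold


# Hypothetical panels of 30 judges each.
print(passes_turing_threshold(10, 30))  # True: about 33% of judges fooled
print(passes_turing_threshold(9, 30))   # False: exactly 30% is not "more than" 30%
```

A threshold like this says nothing about how long the conversations last, which is exactly why the five-minute limit made the 2014 result controversial.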

Whether Eugene Goostman truly passed the Turing test remains a matter of opinion rather than fact. However, another trend has surfaced in the last few years that may indicate AI is closer to human intelligence than ever before: many scientific breakthroughs are no longer attributed to humans.

Just by reading the headlines of articles about the solutions to Levinthal's Paradox and Schrödinger's equation, you can easily notice that the breakthroughs are attributed to the AI that solved the problem, not to humans. What you won't see is a headline like “Researchers at Google solve a fifty-year-old problem,” or “Scientists at Freie Universität Berlin solve Schrödinger's equation.” The popularity of articles that mention AI may contribute to this phenomenon, but it still shows that many modern breakthroughs are starting to be credited to AI instead of humans. While some might believe article titles are irrelevant, you have to wonder: when will humans be pushed out of the equation altogether? After all, in 2018, Google released AutoML, an AI that can build new AI.

We say that AI is paradoxically intelligent because of its limitations, but do those limitations really exist? Or have we simply not yet created an AI advanced enough to outperform us, even in areas where we believe we are superior? We could be prideful and point out that humans don't need 60,000 images of handwritten digits from 0 to 9 to recognize those digits (roughly the size of the training set in MNIST, a popular dataset for teaching AI models this task), but limitations like these are slowly starting to fade away.

A learning method called “less than one”-shot learning, or LO-shot learning, was introduced relatively recently. Using LO-shot learning, an AI model learned to recognize all ten digits from only five carefully constructed training images. The trick is that each image carries a “soft label” that blends several digits, so a single example can teach the model about more than one class.
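To see how soft labels let a model distinguish more classes than it has examples, consider the Python sketch below. It is a simplified illustration in the spirit of the soft-label nearest-prototype classifiers used in LO-shot research, not the researchers' code: two hypothetical prototypes on a one-dimensional line, each with a soft label spread over three classes, vote on a new point with weights that grow as the point gets closer to them. The positions, labels, and the inverse-distance weighting are all assumptions chosen for clarity.

```python
from typing import List, Sequence, Tuple

# Hypothetical prototypes: (position on a 1D line, soft label over 3 classes).
# Two prototypes, three classes: fewer examples than classes.
PROTOTYPES: List[Tuple[float, Tuple[float, float, float]]] = [
    (0.0, (0.6, 0.4, 0.0)),  # mostly class 0, partly class 1
    (1.0, (0.0, 0.4, 0.6)),  # mostly class 2, partly class 1
]


def classify(x: float,
             prototypes: Sequence[Tuple[float, Sequence[float]]] = PROTOTYPES,
             eps: float = 1e-9) -> int:
    """Distance-weighted vote over soft labels; returns the index of the winning class."""
    num_classes = len(prototypes[0][1])
    scores = [0.0] * num_classes
    for position, soft_label in prototypes:
        weight = 1.0 / (abs(x - position) + eps)  # closer prototypes count more
        for c, p in enumerate(soft_label):
            scores[c] += weight * p
    return max(range(num_classes), key=lambda c: scores[c])


print([classify(x) for x in (0.1, 0.5, 0.9)])  # [0, 1, 2]
```

Points near the first prototype come out as class 0 and points near the second as class 2, while points in between, where the shared weight on class 1 from both prototypes adds up, come out as class 1: three classes from just two examples.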

This is a staggering development that raises an important question: could you do something similar? If somebody were to give you images of five different animals and later ask you to sort a test set of animal images into ten different animal classes, could you do it? For now, you can still argue that there is a paradox behind how intelligent AI really is and that certain limits will never be broken. But AI has proven time and time again that it can dismantle paradoxes and find solutions to seemingly impossible problems.

I believe there is a good chance that, at some point, AI will find a way to deal with the paradox that currently defines the limits of its intelligence. And when it does, it will surprise us once again.
