If you are building an AI application that uses large language models (LLMs) in a retrieval-augmented generation (RAG) setup, you have likely encountered the term "vector database". It is a concept that comes up again and again in this space. Choosing a vector database has therefore shifted from a matter of technical curiosity to a critical infrastructure decision.

The landscape, however, can be confusing. Terminology is often overloaded, and cost implications are not always obvious upfront. The choice is not as simple as picking the "fastest" or "cheapest" database. It requires understanding fundamentally different philosophies for solving the same problem, and recognizing which trade-offs align with your specific needs.

In this article, we will examine three leading contenders: Pinecone, Weaviate, and Qdrant.

What Different Paths Lead to the Same Destination

At a basic level, all three databases address the same core problem: performing similarity searches over high-dimensional vectors. These vectors are mathematical representations of various types of data. The data can include text, images, or any other information that has been transformed into vectors using an embedding model. Despite solving the same problem, each of these databases takes a remarkably different approach.

Pinecone is the "easy" option. It is a fully managed cloud service designed to handle everything for you. There is no need for you to pick servers, configure complex settings, or manage clusters. Because of this, Pinecone positions itself as the "just works" option for teams that prioritize speed over control.

Weaviate is more like a traditional database with extra features. It is open-source and built around organizing your data with schemas. Weaviate does more than just store vectors. It stores complete objects with properties. It also maintains text indexes for keyword search and even allows query with GraphQL. Essentially, it is designed to serve as your "main database", rather than just another tool in your infrastructure.

Qdrant is built for performance-critical applications and is designed for engineers who prioritize speed. It is written in Rust, a systems programming language known for its memory safety and high performance. Qdrant provides the essential tools and lets you build exactly what you need. This flexibility makes Qdrant not only a fast tool but also an efficient one.

What Design Choices Make or Break a Vector Database

The way these systems are built reveals what each one prioritizes, and these design choices impact everything. Overall, the design of each database clearly reflects its target audience. While they operate in the same space, they are designed to serve different types of users.

What Is Pinecone's Serverless Architecture

Pinecone's design is simple on the surface. You create an index, an equivalent of a folder of vectors, set the dimension and similarity metric, and then start adding vectors. Behind the scenes, Pinecone manages everything for you, including scaling. Your data lives in object storage, such as S3 buckets, while the search indexes are temporary computing resources built on top. This approach enables Pinecone to easily add more copies or even switch between different pod types. However, there is a catch: you pay per action. As a result, costs can grow quickly with larger datasets or frequent searches.

What Is Weaviate's Schema-First Philosophy

Weaviate's design prioritizes structure. In Weaviate, you create classes, which means defining a complete data structure with typed fields, relationships, and indexes, rather than just creating a bucket of vectors. This approach provides much more flexibility and enables hybrid searches, which combine vector and keyword search, along with other types of complex filtering. While this requires careful planning of your data model from the start, it also makes Weaviate an ideal candidate for serving as your main database, rather than just a search tool.

What Is Qdrant's Performance-Optimized Design

Qdrant's design is quite straightforward. Collections hold points, and points contain vectors along with extra data called payloads. You can then create indexes on these payload fields. What makes Qdrant particularly fast is its implementation of filterable HNSW. HNSW is a graph-based algorithm for approximate nearest neighbor search. Qdrant has modified it to include filters directly in the search process. This allows you to apply aggressive filtering without slowing down, which is crucial when searching millions of vectors while filtering by user ID, date, or any other field.

Article continues below

Want to learn more? Check out some of our courses:

How Do They Manage Hybrid Search

Hybrid search is a significant technique for RAGs. It enables them to find relevant information by measuring the similarity between embeddings created for your query and those generated from your database. At the same time, it can also perform standard keyword searches.

How Does Weaviate's Built-In BM25 Approach Work

Weaviate's approach to hybrid search is particularly straightforward. It has a built-in inverted index for text, similar to Elasticsearch. When you run a hybrid query, Weaviate performs both a BM25 keyword search and a vector search simultaneously. It then combines the scores, prioritizing one over the other based on user specifications, and returns the results. This works out of the box because Weaviate treats the original text as equally important as the embeddings created from it.

How Does Pinecone's Sparse Vector Method Work

Pinecone's solution requires slightly more setup. It supports sparse vectors, which are high-dimensional vectors that consist mostly of zeros, alongside regular dense vectors. These sparse vectors are created using an encoder, typically Pinecone's own.

When a user searches for a particular term, Pinecone first compares the dense vectors and then the sparse vectors. Afterward, it combines the scores before returning the results. Essentially, instead of using BM25, which is the standard method for keyword search, Pinecone uses sparse vectors for keyword search.

While the entire process, including encoding, is managed for you, there is a cost implication. Pinecone treats these sparse vectors as additional data, for which it will charge you.

How Does Qdrant's Flexible Multi-Vector Approach Work

Finally, Qdrant takes a middle path. It supports multiple vectors per point, allowing users to store both regular and sparse representations together. This provides greater flexibility, but the process can be more involved for beginners. Users need to manage text processing, including word splitting and extraction, separately.

In this category, Weaviate stands out for its simple and effective approach. It is safe to say that it delivers what most users expect when performing a hybrid search in a RAG system.

How Fast Are Pinecone, Weaviate, and Qdrant

When discussing performance, we are really referring to three connected aspects. The first is how fast responses are, also known as latency. The second is how many queries the system can handle, which is called throughput. The third is how efficiently it uses resources.

Performance can vary significantly based on configuration, dataset size, and query patterns. According to Qdrant's benchmarks from January 2024, Qdrant showed strong performance in specific configurations, with response times that can be much faster than alternatives. Weaviate's performance depends heavily on your configuration choices. Pinecone, being a cloud-based service, includes network overhead in its response times.

To minimize latency and maximize throughput, Qdrant is worth examining closely. It not only has the potential for low latency, but it also offers high throughput when properly configured.

However, if you plan to use Qdrant, or even Weaviate, be prepared to do some extra work. While Pinecone automatically handles increased loads, Weaviate and Qdrant require you to add more servers or copies. For technical teams, this should not be too difficult to manage.

How Expensive Are Pinecone, Weaviate, and Qdrant

While performance is highly important, budget constraints often play a significant role. In this regard, the differences between the three databases become even more pronounced. Monthly costs can vary significantly. For a typical production scenario of 5 million vectors at 768 dimensions, handling 200 queries with backup copies, the monthly expenses can differ dramatically.

What Is the Cost of Pinecone's Usage-Based Model

Pinecone uses a pay-as-you-go model, which can lead to surprising bills if you are not careful. Storage is relatively cheap, at about $5 per month for 15GB of data. Search costs, however, tell a different story. Each search uses "Read Units" (RUs) based on the amount of data being queried.

With proper optimization, such as using namespaces, partitioning, and filtering, typical production workloads cost between $500 and $2,000 per month for moderate usage. Costs can rise significantly if queries are inefficient and search through all your data. The key is understanding that Pinecone rewards optimization. While it markets itself as a platform that handles everything for you, there is still considerable setup required to keep costs reasonable.

What Is the Cost of Weaviate's Infrastructure

Weaviate uses a completely different pricing model. You do not pay for searches, only for the data you store and the infrastructure you need. For example, those same 5 million vectors at 768 dimensions cost about $730 per month on their Standard plan with backup copies. As long as your servers can handle the workload, you can run millions of searches at no extra cost. Essentially, you are paying for infrastructure capacity, not per operation. For larger deployments with more resources, costs can reach up to $4,000 per month.

What Is the Cost of Qdrant's Resource-Based Model

When working with Qdrant, you pay for the computing power and memory you reserve, regardless of whether you use all of it. For a similar workload, a three-server cluster with good specifications costs approximately $3,000 per month on their managed service. Hosting Qdrant yourself can reduce these costs further, to between $600 and $700 per month. However, this approach requires significantly more work on your part.

What Are the Hidden Operational Costs

There are also some hidden costs to consider, mostly related to the amount of work required to run these databases in daily operations. Pinecone's fully managed approach means you rely entirely on their infrastructure. Updates happen automatically, scaling is handled for you, and monitoring is provided through their dashboard. This setup is ideal for small teams, but it can feel limiting for companies with specific compliance needs or those that need detailed control.

Weaviate and Qdrant allow you to start with their managed services and transition to self-hosting as you grow. However, this flexibility comes with added responsibility. To be more precise, if you plan to use one of these two databases, you will need someone who understands distributed systems, can debug performance issues, and can plan capacity effectively.

What Are the Recent Developments in Vector Databases

The vector database landscape has evolved rapidly from 2023 to 2025. Pinecone introduced serverless indexes to help reduce costs for variable workloads. Weaviate added multi-tenancy features and improved its hybrid search capabilities. Qdrant expanded its quantization options to reduce memory usage while maintaining accuracy.

The market itself has grown significantly. According to various industry reports, the vector database market is projected to reach several billion dollars by 2028.

Which Vector Database Should You Choose

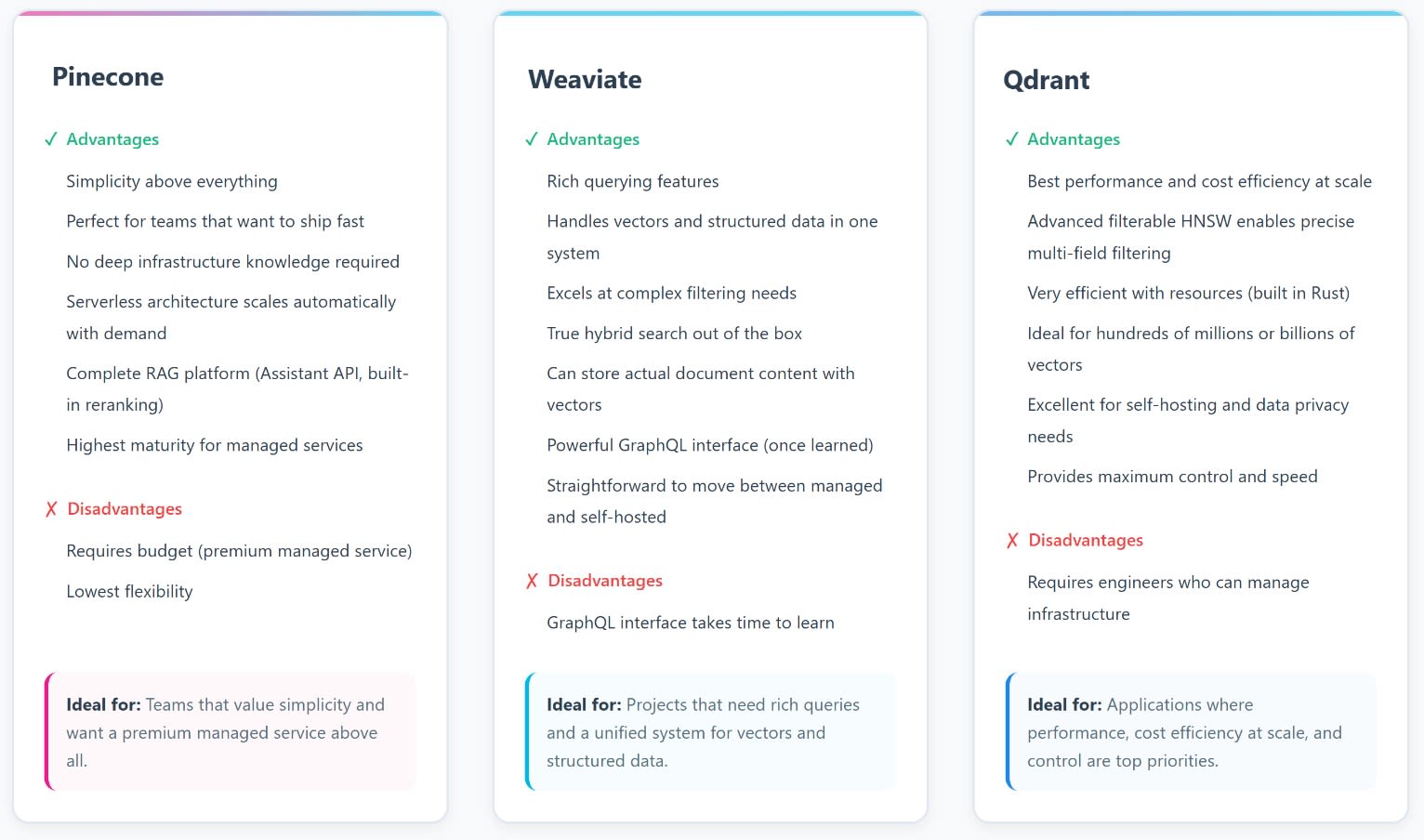

Choosing the right database depends entirely on your needs, as all three are quite different from each other. The image below shows a quick breakdown of their advantages, disadvantages, and ideal use cases.

Vector databases occupy an interesting crossroads. They are part storage system, part search engine, and part machine learning infrastructure. The three we have examined might seem similar on the surface, but they solve the same problem in completely different ways.

Comparing them head-to-head is challenging because they are fundamentally different. Pinecone is essentially a managed service that hides all the complexity. Weaviate is a feature-rich database designed to be an all-in-one solution. Qdrant is a performance-oriented tool that assumes users know what they are doing.

This means that asking for the "best" vector database can be misleading. The real question is: what matters most for your project, and what can your team realistically handle? A solo founder building an MVP has different needs compared to a team of ML engineers at a Series B startup.

But here is what is truly striking: this entire category barely existed before 2020 or 2021. The rapid evolution of vector databases means that the choice you make today does not have to be permanent. New approaches will continue to emerge, and better architectures will be developed. The best solution for your needs in 2027 might not even exist yet.

So, here is my advice. Choose the vector database whose strengths align with your current needs. If you need to ship quickly and lack infrastructure expertise, Pinecone makes sense, even if it is more expensive. If you have a capable team and want more flexibility, Weaviate or Qdrant might be better options. Do not overthink it. Build something, learn from it, and remain flexible enough to switch to a solution better suited to your needs as new options become available.