Machine learning can offer your business plenty of benefits that unfortunately come hand in hand with plenty of security vulnerabilities. Although you may want your machine learning project to work as fast as possible at the lowest cost, good security can be precisely the opposite - slow and expensive. This article explains how secure machine learning is and provides you with a list of the main risks you should guard against.

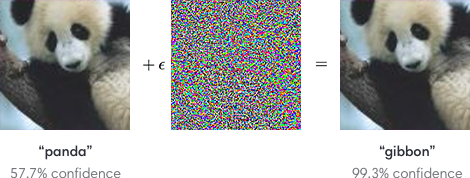

1. How Adversarial Examples Scramble Data

Adversarial examples are among the most commonly encountered attacks. They aim to fool your ML system by feeding it malicious input that contains very small, barely noticeable perturbations. They function like optical illusions for your system and can cause it to make false predictions and categorizations.

Image Source: https://openai.com/blog/adversarial-example-research/
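To make the idea of a small perturbation concrete, here is a minimal sketch of one well-known technique, the fast gradient sign method, applied to a tiny logistic regression. The weights, the input, and the `epsilon` value are hypothetical numbers chosen for illustration, not taken from any real system.

```python
import math

# Hypothetical, hand-picked weights for a tiny logistic-regression "model".
WEIGHTS = [2.0, -3.0, 1.0]
BIAS = 0.0

def predict_proba(x):
    """Probability of the positive class for feature vector x."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))

def fgsm_perturb(x, true_label, epsilon):
    """Shift every feature by epsilon in the direction that increases the loss.

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to the input is (p - y) * w.
    """
    p = predict_proba(x)
    grad = [(p - true_label) * w for w in WEIGHTS]
    sign = lambda g: (g > 0) - (g < 0)
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

x = [0.3, 0.1, 0.2]  # clean input whose true label is 1
x_adv = fgsm_perturb(x, true_label=1, epsilon=0.1)

print(predict_proba(x))      # above 0.5, so classified as positive
print(predict_proba(x_adv))  # below 0.5, so classified as negative
```

No feature moves by more than 0.1, yet the score drops from about 0.62 to about 0.48 and the predicted class flips.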

2. How Data Poisoning Ruins Your Data Models

Data poisoning happens when an attacker manipulates the data fed into your ML system, thus compromising it. Your machine learning engineers should examine your training data, identify the weaknesses that leave it open to tampering, and estimate how much of it an attacker could control. Attackers can even manipulate the raw data used to train models, so that the training process itself goes wrong.
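The effect of poisoned training data can be sketched with a toy nearest-centroid classifier. The data points below are invented for illustration, and label flipping is only one of several ways an attacker might poison a training set.

```python
def train_centroids(samples):
    """Return {label: mean feature value} for 1-D (value, label) samples."""
    sums, counts = {}, {}
    for value, label in samples:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def classify(centroids, value):
    """Predict the label whose centroid is closest to value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))

clean = [(1, 0), (2, 0), (3, 0), (7, 1), (8, 1), (9, 1)]
# The attacker flips the labels of two class-1 samples before training.
poisoned = [(1, 0), (2, 0), (3, 0), (7, 0), (8, 0), (9, 1)]

clean_model = train_centroids(clean)
poisoned_model = train_centroids(poisoned)

print(classify(clean_model, 6))     # 1
print(classify(poisoned_model, 6))  # 0
```

Two flipped labels are enough to move the class-0 centroid from 2.0 to 4.2, so the same input is now classified differently.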

3. How Online System Manipulation Attacks ML Models

Online machine learning systems continue to learn during operational use and can change their behavior over time. An easy-to-carry-out attack consists of nudging the still-learning system through its input, gradually retraining the model to do the wrong thing. To guard against this, your machine learning engineers should consider data provenance and algorithm choice very carefully.
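The nudging attack can be illustrated with a toy online learner that keeps an exponential moving average. The class `OnlineMean`, the `max_drift` guard, and all numbers are hypothetical. The guard is one possible mitigation offered as an assumption, not a complete defense.

```python
class OnlineMean:
    """Running estimate that is updated with every new observation."""

    def __init__(self, initial, rate=0.2, max_drift=None):
        self.baseline = float(initial)  # trusted starting point
        self.value = float(initial)
        self.rate = rate
        self.max_drift = max_drift      # None disables the guard

    def update(self, observation):
        candidate = self.value + self.rate * (observation - self.value)
        if self.max_drift is not None:
            # Keep the estimate within a fixed band around the baseline.
            low = self.baseline - self.max_drift
            high = self.baseline + self.max_drift
            candidate = min(max(candidate, low), high)
        self.value = candidate
        return self.value

def nudge(model, steps=50, push=5.0):
    """Attacker feeds inputs that are always slightly above the estimate."""
    for _ in range(steps):
        model.update(model.value + push)
    return model.value

unguarded = OnlineMean(100.0)
guarded = OnlineMean(100.0, max_drift=10.0)
nudge(unguarded)
nudge(guarded)

print(unguarded.value)  # has drifted far from the baseline of 100
print(guarded.value)    # capped at baseline + max_drift
```

Each individual input looks only slightly unusual, but after 50 steps the unguarded estimate has moved from 100 to about 150, while the guarded one stops at 110.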

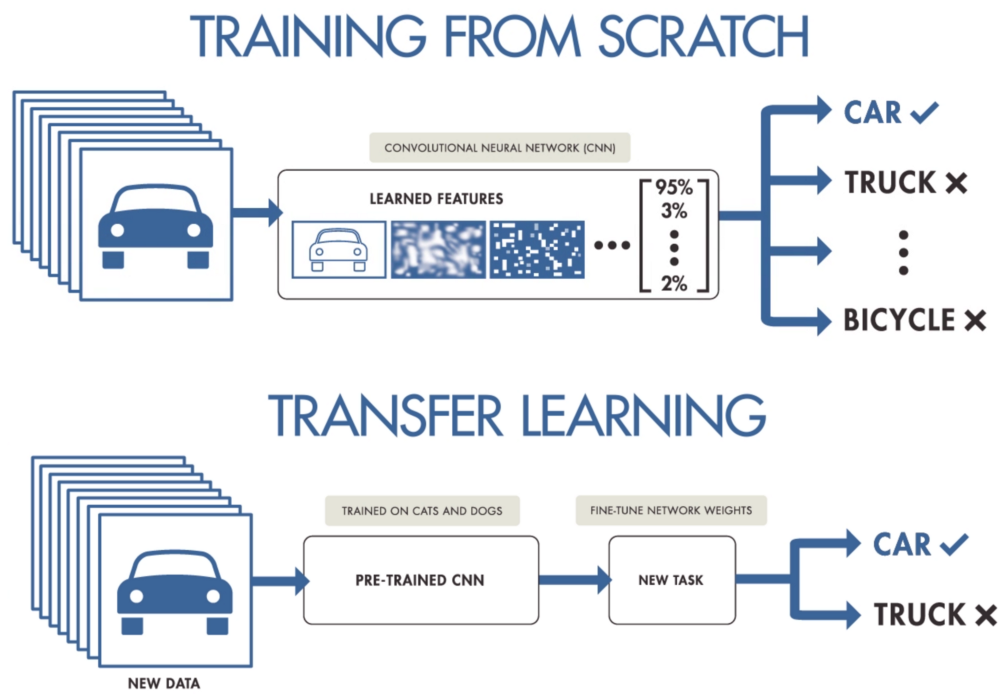

4. How Transfer-Learning Attacks Tuned Models

Machine learning systems are usually built by tuning an already trained base model. Basically, the base model's generic abilities are fine-tuned with specialized training. If the pre-trained model is widely available, attackers can devise attacks against it that also succeed against your tuned model.

Make sure that the pre-trained model you use for fine-tuning does not include unanticipated behaviors. There is also a risk when you adopt models for transfer from third-party groups. If you do so, make sure that their documentation describes exactly what their system does and how they control the risks.
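One basic precaution when taking a pre-trained model from a third party is to check that the file you downloaded matches a checksum the publisher provides. The sketch below assumes the publisher lists a SHA-256 digest. The function names are hypothetical. Note that this only detects tampering or corruption in transit; it says nothing about whether the model itself behaves as described.

```python
import hashlib
import hmac

def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_model_file(path, expected_sha256):
    """Return True only if the file's digest matches the published one."""
    actual = sha256_of_file(path)
    return hmac.compare_digest(actual, expected_sha256.lower())
```

A loading script could call `verify_model_file` before fine-tuning and refuse to continue when it returns `False`.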

Image Source: Transfer Learning vs Traditional ML, https://medium.datadriveninvestor.com/introducing-transfer-learning-as-your-next-engine-to-drive-future-innovations-5e81a15bb567

5. How Data Breaches Cause Issues for Data Models

Machine learning systems often include highly sensitive and confidential data that can be attacked. Even sub-symbolic ‘feature’ extraction can be useful to an attacker, because the extracted features can be used to hone adversarial attacks.
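As a rough illustration of how sensitive training data can leak through normal use, consider a toy model that memorizes its training rows and reports a confidence score. The records, the scoring formula, and the threshold are all invented for this sketch. Real attacks of this kind, often called membership inference, are more involved.

```python
def nearest_distance(training_rows, query):
    """Distance from query to the closest memorized training row."""
    return min(
        sum((a - b) ** 2 for a, b in zip(row, query)) ** 0.5
        for row in training_rows
    )

def confidence(training_rows, query):
    """Toy confidence score: 1.0 for an exact match, lower as distance grows."""
    return 1.0 / (1.0 + nearest_distance(training_rows, query))

def looks_like_member(training_rows, query, threshold=0.99):
    """Attacker's guess: very high confidence suggests the row was in training."""
    return confidence(training_rows, query) >= threshold

# Hypothetical sensitive records: (age, income in thousands).
training_rows = [(34, 72), (51, 88), (29, 45)]

print(looks_like_member(training_rows, (51, 88)))  # True
print(looks_like_member(training_rows, (50, 90)))  # False
```

The attacker never sees the training set, only the confidence scores, yet can still tell that the record `(51, 88)` was part of it.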

So, by now, you hopefully have a good idea about why risk management is an essential part of any data science project. Threats are always there and can range in severity, so be cautious and prepared.