The first article in this series, "How Emotional Artificial Intelligence Can Improve Education", covered what emotion AI is. In it, I mentioned one way to apply emotion AI in education: you can estimate how interesting a lecture is by analyzing students’ facial expressions. If the students mostly display positive emotions, you can interpret that as interest: they find the lecture compelling, at least enough to keep paying attention. On the other hand, if the students mainly express negative emotions, they are probably not enjoying the lecture. Framed this way, using emotion AI to improve lectures becomes a binary classification problem, in which you classify parts of lectures into two classes, such as interesting and boring.

In the second article in this series, "How to Detect Emotions in Images Using Python", I explained how to detect emotions in images. This is not the only way to identify emotions, but it is probably the simplest. By collecting and annotating a dataset, you can train a model to analyze images and predict which emotion a person in an image is expressing. You can build your own model, which larger organizations that use emotion AI sometimes do, but most machine learning engineers tend to use pre-trained models that they fine-tune to better suit their data. Keep in mind that most pre-trained models are trained to predict specific emotions. While knowing the exact emotion is useful, it is not necessary for binary classification problems like the lecture example I provided. Since a binary classification problem is much simpler than a multiclass classification problem, you can usually expect higher accuracy on the binary task than the original model achieves on the multiclass task, even when you start from a pre-trained model. But how do you apply emotion recognition to a real-life situation, with real people who aren’t static like in still images but often change their facial expressions?

In this article, I will show you how to recognize emotions in videos so that you can, for example, figure out how students feel about a lecture.

Differences Between Recognizing Emotions in Videos vs. Images

At first, it might seem that detecting emotions in videos is completely unrelated to how you'd detect emotions in images, but that couldn't be further from the truth. Detecting emotions in videos is simply an extension of detecting emotions in images: a video is nothing more than a sequence of images. However, when working with videos, we call these images “frames.” You have probably come across this term earlier, for example, when thinking about the fps of a video: a 30 fps video is a video that contains 30 frames (images) per second. Because videos have so many still images per second, we get the illusion of movement, which also means that the order of the frames is essential.

Taking this into consideration, we can deduce that there are three steps to detect emotions in videos:

- Recognizing the emotion in each particular frame.

- Choosing a time frame we want to analyze (e.g., the first five seconds of a 30 fps video).

- Assigning each time frame an emotion by looking at the predictions in order (e.g., a time frame of five seconds of a 30 fps video means that you will have 150 predicted emotions, but you will assign a single result for that sequence of 150 predicted emotions).
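The three steps above can be sketched in plain Python. This is only a minimal sketch: it assumes the per-frame predictions are already available as a list of emotion labels in frame order, the function name `label_time_frames` and the sample labels are hypothetical, and a majority vote is just one simple way to assign a single emotion to each time frame.

```python
from collections import Counter


def label_time_frames(frame_labels, fps, window_seconds):
    """Group per-frame labels into fixed-length time frames and
    assign each time frame its most common label (majority vote)."""
    # Number of video frames that fall into one time frame,
    # e.g. 30 fps * 5 s = 150 frames.
    frames_per_window = int(round(fps * window_seconds))
    if frames_per_window < 1:
        raise ValueError("time frame must contain at least one video frame")

    results = []
    for start in range(0, len(frame_labels), frames_per_window):
        window = frame_labels[start:start + frames_per_window]
        # most_common(1) returns [(label, count)] for the top label
        dominant_label, _ = Counter(window).most_common(1)[0]
        results.append(dominant_label)
    return results


# Hypothetical predictions for 10 seconds of a 30 fps video (300 frames)
frame_labels = ["happy"] * 100 + ["neutral"] * 50 + ["sad"] * 120 + ["happy"] * 30
print(label_time_frames(frame_labels, fps=30, window_seconds=5))
# prints ['happy', 'sad']
```

The order of the frames matters here only for deciding which frames belong to which time frame; within a time frame, this sketch looks at how often each label appears.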

Types of Emotion Recognition Systems

All emotion recognition systems follow the same basic steps to get to a result, but there are multiple ways to design these systems, ranging from low to high complexity.

The simplest, or lowest complexity, way is to perform emotion recognition on each specific image using a Convolutional Neural Network, store the resulting predictions (e.g., in a Pandas DataFrame), and then run a statistical analysis on the results.

A medium complexity solution would also consider how long each emotion is present in a time frame and then select the dominant emotion. However, such a solution doesn't consider how sure the model was in its predictions for each particular frame, which could be relevant to the final prediction we assign to the time frame as a whole.
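To illustrate the difference, here is a minimal sketch that compares the two ideas on a handful of made-up frames. It assumes each frame's prediction is a dictionary that maps emotion names to scores between 0 and 1; the helper names and the scores are hypothetical. The first function picks the emotion that wins the most frames, while the second shows what taking the model's confidence into account could look like by summing the scores.

```python
from collections import Counter, defaultdict


def dominant_by_duration(frame_scores):
    """Pick the emotion that is the top prediction in the most frames."""
    top_labels = [max(scores, key=scores.get) for scores in frame_scores]
    return Counter(top_labels).most_common(1)[0][0]


def dominant_by_confidence(frame_scores):
    """Pick the emotion with the largest summed score across frames."""
    totals = defaultdict(float)
    for scores in frame_scores:
        for emotion, score in scores.items():
            totals[emotion] += score
    return max(totals, key=totals.get)


# Hypothetical scores: "sad" narrowly wins two frames,
# "happy" wins one frame with very high confidence.
frame_scores = [
    {"happy": 0.45, "sad": 0.55},
    {"happy": 0.48, "sad": 0.52},
    {"happy": 0.95, "sad": 0.05},
]

print(dominant_by_duration(frame_scores))    # prints sad
print(dominant_by_confidence(frame_scores))  # prints happy
```

The two functions disagree on this toy input, which is exactly the kind of case where ignoring confidence could change the result for a time frame.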

A high-complexity way of performing emotion recognition in videos is to build a model that performs emotion recognition in videos out of the box. For example, we can create a hybrid model that consists of two networks, a Convolutional Neural Network and a Recurrent Neural Network. These hybrid models are called CNN-RNN models.

I will focus on the simplest approach because it doesn't require a lot of coding and I can use pre-trained models. Additionally, tuning hyperparameters for hybrid models is very difficult.

Remember that, while I’m focused on creating a system that can recognize different people's emotions in videos, you can apply all of the concepts in this article to any classification problem. No matter what you are trying to do, you can always approach working with videos as if you were working with a series of images.

How To Recognize Emotions in Videos

To demonstrate how you can recognize emotions in a video, I will perform emotion recognition on the video below using pre-trained models from the FER library.

After I perform emotion recognition, I will store the result in a Pandas DataFrame. Afterward, I will analyze the results and predict whether the person shows more positive or negative emotions during the video. In a classroom situation, knowing whether the emotions someone displays are generally positive or negative would be enough to gauge whether the students are interested in the lecture.

- Intro to Pandas: What is a Pandas DataFrame and How to Create Them

- Intro to Pandas: How to Analyze Pandas DataFrames

First, I need to import the libraries I plan on using.

# Import necessary libraries

from fer import FER

from fer import Video

import pandas as pd

After importing the necessary libraries, I’ll load in the pre-trained emotion detector:

# Define pre-trained emotion detector

emotion_detector = FER(mtcnn=True)

Next, I need to specify the path to the video I want to use, and store it inside the "video" variable:

# Define the path to video

path_to_video = "emotion_recognition_test_video.mp4"

# Define video

video = Video(path_to_video)

Lastly, I can use the emotion detector to analyze the video:

# Analyze the video and display the output

result = video.analyze(emotion_detector, display=True)

By using "display=True," I get the following video displayed as the emotion detector analyzes the video:

It is hard to see which emotions are dominant because the video runs at 24.70 fps, and the model analyzes each frame to give predictions, so I’ll create a Pandas DataFrame that will contain data for each separate frame:

# Create Pandas DataFrame with emotion data

emotions_df = video.to_pandas(result)

emotions_df.head()

I will get the following result:

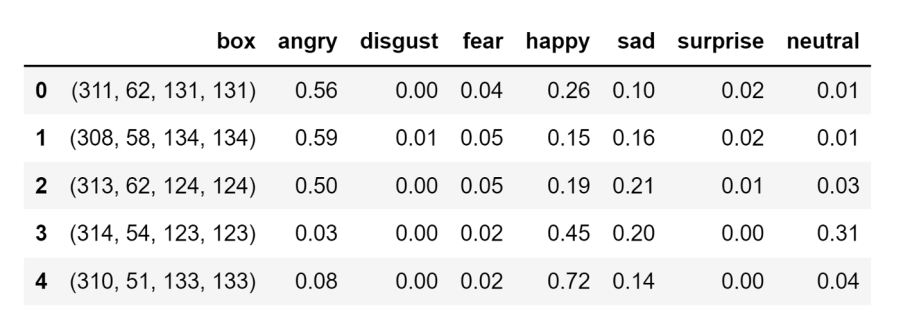

A Pandas DataFrame containing emotion data for each frame of the video.

Image source: Edlitera

The "box" column represents the coordinates of the bounding box that encloses the face of the person in the video, while the other values display how likely a particular emotion is. I can use a simple approach to get a final verdict on how the person feels: I will compare the sum of all “positive emotion” values to the sum of “negative emotion” values for the whole video. Theoretically, I could also take into consideration how big the difference is in particular frames to get an even more accurate result. I will ignore the situations where the model predicted neutral, because those don't give me any useful information on how the person in the video feels.

# Predict whether a person shows interest in a topic or not

positive_emotions = sum(emotions_df.happy) + sum(emotions_df.surprise)

negative_emotions = sum(emotions_df.angry) + sum(emotions_df.disgust) + sum(emotions_df.fear) + sum(emotions_df.sad)

if positive_emotions > negative_emotions:

    print("Person is interested")

elif positive_emotions < negative_emotions:

    print("Person is not interested")

else:

    print("Person is neutral")

In this case, I get the result:

"Person is interested"

This, of course, means that the person mostly displayed positive emotions in the video.

As you can see, I was able to perform emotion recognition with just a few lines of code using the FER Python library’s built-in pre-trained model. To return to the lecture example, this gives me the basis of a system that can help teachers improve their lectures by gauging students’ emotions. Knowing how invested your students are in your lecture is arguably one of the most important parts of being a teacher. If they are interested, they are probably getting something out of the lecture.
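Earlier I mentioned that I could also take into consideration how big the difference between positive and negative emotions is in particular frames. One possible way to do that is sketched below. It is an illustration rather than part of the FER library: the function name, the `min_margin` threshold of 0.2, and the sample scores are all assumptions. It expects one dictionary of scores per frame, which with pandas could be obtained from the DataFrame with something like `emotions_df.to_dict("records")`.

```python
POSITIVE = ("happy", "surprise")
NEGATIVE = ("angry", "disgust", "fear", "sad")


def interest_by_frame_margin(frames, min_margin=0.2):
    """Vote frame by frame, counting only frames where positive and
    negative scores differ by at least min_margin."""
    positive_votes = 0
    negative_votes = 0
    for scores in frames:
        positive = sum(scores.get(name, 0.0) for name in POSITIVE)
        negative = sum(scores.get(name, 0.0) for name in NEGATIVE)
        margin = positive - negative
        if margin >= min_margin:
            positive_votes += 1
        elif margin <= -min_margin:
            negative_votes += 1
        # Frames with a small margin (e.g. mostly neutral) are skipped

    if positive_votes > negative_votes:
        return "Person is interested"
    if positive_votes < negative_votes:
        return "Person is not interested"
    return "Person is neutral"


# Hypothetical per-frame scores using the same emotion names as emotions_df
frames = [
    {"angry": 0.0, "disgust": 0.0, "fear": 0.0, "happy": 0.9, "sad": 0.0, "surprise": 0.05, "neutral": 0.05},
    {"angry": 0.05, "disgust": 0.0, "fear": 0.0, "happy": 0.1, "sad": 0.05, "surprise": 0.0, "neutral": 0.8},
    {"angry": 0.0, "disgust": 0.0, "fear": 0.05, "happy": 0.7, "sad": 0.05, "surprise": 0.1, "neutral": 0.1},
    {"angry": 0.3, "disgust": 0.1, "fear": 0.1, "happy": 0.05, "sad": 0.4, "surprise": 0.0, "neutral": 0.05},
]
print(interest_by_frame_margin(frames))  # prints Person is interested
```

Compared with summing everything over the whole video, this variant lets each frame count once and ignores frames where the model is undecided, so a few extreme frames cannot dominate the verdict.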

You can scale up and apply this solution to any classification problem. If you have a video classification problem that is not focused on emotion recognition, you won't be able to use the FER library, but you can easily modify the image classification model we created in the previous article of this series. To repurpose the model for any multiclass classification problem, you can simply change the CSV file containing the names of the images and the labels connected to them. Once the model is trained, you just need to separate a video into frames, feed them to the model, have the model make predictions, store the predictions inside a Pandas DataFrame, and finally, analyze those predictions.
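The general workflow described above can be sketched without any particular model. In the minimal example below, `classify_video`, `toy_classifier`, and the numeric "frames" are hypothetical stand-ins: in practice the frames would come from a video reader such as OpenCV's `cv2.VideoCapture`, and `classify_frame` would wrap your trained image classifier. The list of dictionaries it returns could then be passed to `pd.DataFrame(...)` for further analysis.

```python
from collections import Counter


def classify_video(frames, classify_frame, every_nth=1):
    """Run a per-frame classifier over an iterable of frames and
    return the per-frame predictions plus the most common label."""
    predictions = []
    for index, frame in enumerate(frames):
        # Optionally skip frames to save time on long videos
        if index % every_nth != 0:
            continue
        predictions.append({"frame": index, "label": classify_frame(frame)})

    if not predictions:
        return predictions, None
    counts = Counter(p["label"] for p in predictions)
    return predictions, counts.most_common(1)[0][0]


# Stand-in for a trained model: "frames" here are plain numbers and the
# hypothetical classifier labels them by a threshold.
def toy_classifier(frame):
    return "bright" if frame > 0.5 else "dark"


toy_frames = [0.9, 0.8, 0.2, 0.7, 0.1, 0.6]
predictions, overall = classify_video(toy_frames, toy_classifier)
print(overall)  # prints bright
```

Keeping the classifier as a plain function argument means the same loop works for any image classification model, which is the point made above: a video can always be treated as a series of images.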

The workflow we used in this article is the same, except that we simplified it by using a pre-trained model. For most use cases, this is a much better solution than building a hybrid model and training it from scratch, because it is much simpler and enables even those unfamiliar with Deep Learning to use AI for emotion recognition. Using a pre-trained model allows us to focus on how we use the model instead of how we create it.